Tag: GPU memory

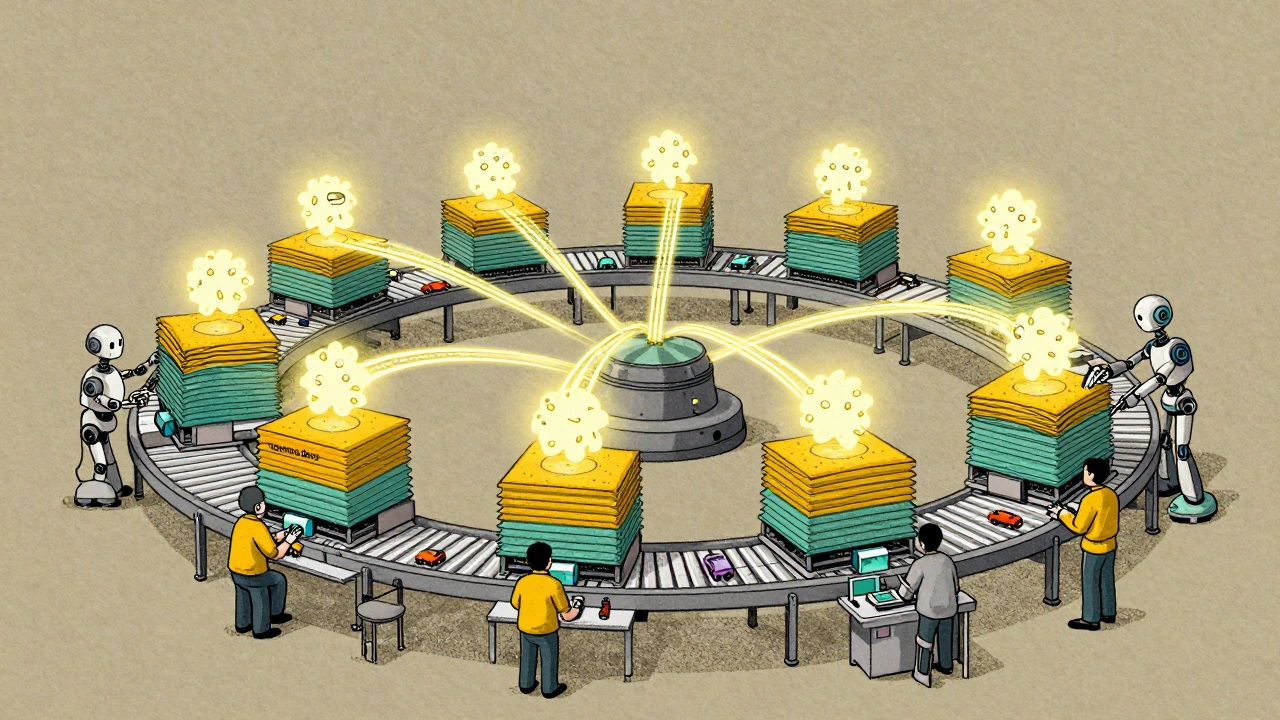

Model Parallelism and Pipeline Parallelism in Large Generative AI Training

Model and pipeline parallelism make it possible to train generative AI models that are too large for a single GPU's memory by splitting the model across multiple GPUs. Learn how these techniques work around GPU memory limits and support the training of models like GPT-3 and Claude 2.
