GPU Autoscaling: Cut AI Costs and Boost Performance with Smart Resource Management

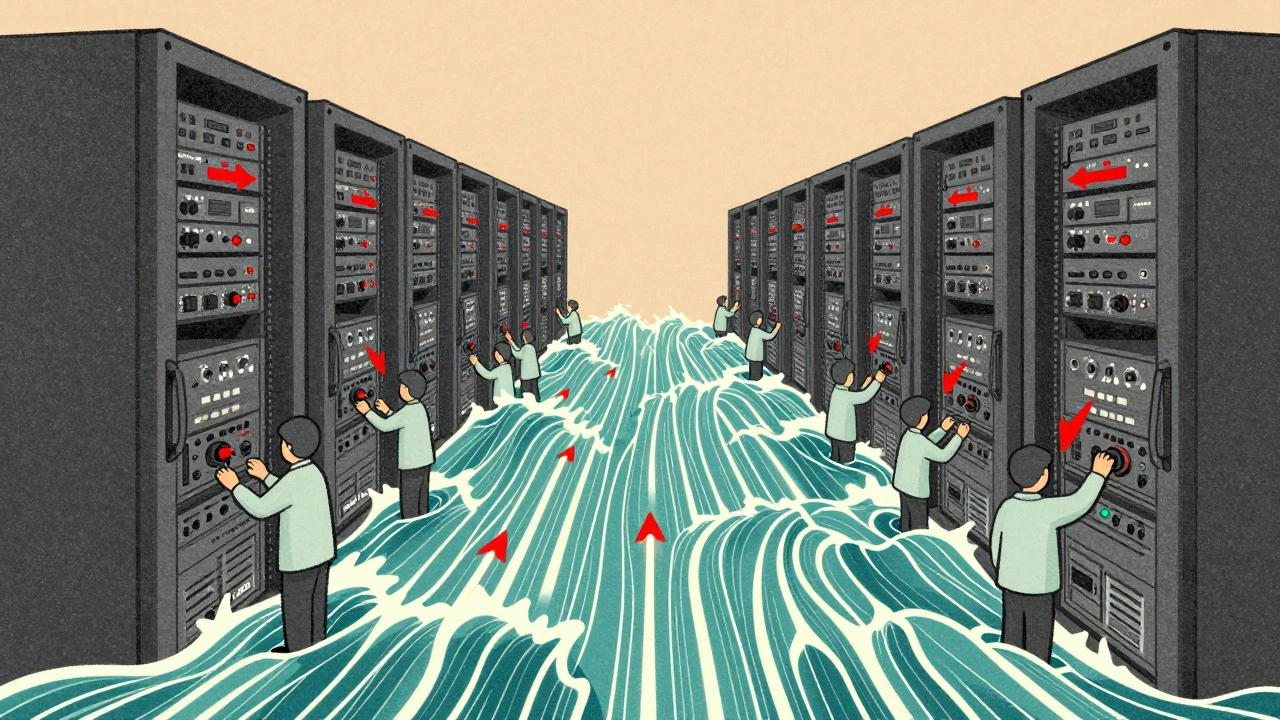

When you run large language models or train AI systems, GPU autoscaling (the automatic adjustment of GPU resources based on real-time workload demand) becomes essential. It’s not just about having more power; it’s about having the right amount at the right time. Many teams waste money running idle GPUs overnight or over-provisioning for peak traffic that only arrives a few hours a week. GPU autoscaling fixes that by adding capacity when demand rises and releasing it when demand falls, which can cut cloud bills by up to 60% without hurting performance.
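To make "adding and releasing capacity" concrete, here is a minimal Python sketch of the proportional rule documented for the Kubernetes Horizontal Pod Autoscaler: desired replicas = ceil(current replicas × current metric / target metric). It assumes average GPU utilization (in percent) is the scaling metric, which Kubernetes does not collect by default and which typically comes from a custom-metrics pipeline. The function name and the 10% tolerance default are illustrative; the real HPA also clamps the result between its configured minimum and maximum replica counts.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, tolerance: float = 0.1) -> int:
    """Proportional scaling rule modeled on the Kubernetes HPA formula:
    desired = ceil(current_replicas * current_metric / target_metric)
    """
    if current_replicas <= 0 or target_metric <= 0:
        raise ValueError("replicas and target must be positive")
    ratio = current_metric / target_metric
    # Ignore small deviations so the fleet does not churn on noise.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    return math.ceil(current_replicas * ratio)


# 4 GPU replicas averaging 90% utilization against a 60% target: scale up.
print(desired_replicas(4, 90.0, 60.0))  # 6
# 4 GPU replicas averaging 15% utilization against a 60% target: scale down.
print(desired_replicas(4, 15.0, 60.0))  # 1
```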

This isn’t theoretical. Companies using GPU autoscaling with tools like Kubernetes, AWS SageMaker, or Google Cloud Run have seen their AI inference costs drop while handling 3x more requests during traffic spikes. It works hand in hand with two other practices: spot instances (low-cost, interruptible cloud GPUs that suit non-critical training jobs) and AI scheduling (timing jobs to run during off-peak hours, when cloud pricing is lowest). Together, these form the backbone of cost-efficient AI operations. You don’t need to be a cloud expert to use them; you just need to be smart about how you configure triggers, cooldown periods, and minimum capacity.
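The three knobs named above (triggers, cooldown periods, and minimum capacity) interact in ways that are easiest to see in code. The sketch below is a hypothetical threshold-based scaler, not the configuration format of Kubernetes, SageMaker, or Cloud Run; `StepScaler` and all of its default values are illustrative assumptions. Replaying a short utilization trace shows each knob at work.

```python
from dataclasses import dataclass


@dataclass
class StepScaler:
    """Hypothetical threshold-based GPU scaler; every default is illustrative."""
    min_replicas: int = 1        # minimum capacity: keep warm GPUs so early requests avoid cold starts
    max_replicas: int = 8        # ceiling that caps spend during spikes
    scale_up_at: float = 0.80    # trigger: mean GPU utilization above this adds one replica
    scale_down_at: float = 0.30  # trigger: mean GPU utilization below this removes one replica
    cooldown_s: float = 300.0    # cooldown: minimum seconds between scaling actions
    replicas: int = 1
    last_action_s: float = float("-inf")

    def step(self, utilization: float, now_s: float) -> int:
        """Observe utilization (0.0 to 1.0) at time now_s and return the replica count."""
        if now_s - self.last_action_s < self.cooldown_s:
            return self.replicas  # still cooling down, so ignore this sample
        target = self.replicas
        if utilization > self.scale_up_at:
            target += 1
        elif utilization < self.scale_down_at:
            target -= 1
        # Never go below minimum capacity or above the ceiling.
        target = max(self.min_replicas, min(self.max_replicas, target))
        if target != self.replicas:
            self.replicas = target
            self.last_action_s = now_s
        return self.replicas


scaler = StepScaler(max_replicas=4)
print(scaler.step(0.95, now_s=0))     # 2: utilization trigger fires
print(scaler.step(0.95, now_s=60))    # 2: inside the 300 s cooldown
print(scaler.step(0.95, now_s=400))   # 3
print(scaler.step(0.10, now_s=800))   # 2
print(scaler.step(0.10, now_s=1200))  # 1
print(scaler.step(0.10, now_s=1600))  # 1: held at min_replicas
```

Managed autoscalers often use a longer window for scaling down than for scaling up, since removing a GPU too early costs latency while keeping one a few extra minutes costs little; a single `cooldown_s` keeps this sketch short.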

What makes GPU autoscaling different from simple load balancing? Load balancing spreads traffic across the GPUs you already have; autoscaling changes how many you have. The more advanced setups are also predictive: they don’t just react to current usage, they learn from patterns. If your chatbot gets busy every weekday at 9 a.m., capacity ramps up before the rush. If your model training runs every Sunday at midnight, the GPUs spin down once it finishes, by Monday morning. This kind of intelligence reduces latency, avoids bottlenecks, and keeps your users happy. Combined with distributed training, it lets you scale across dozens or even hundreds of GPUs without manual intervention.
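One simple way to get the "ramp up before the rush" behavior is a seasonal forecast: average past load for each hour of the week, then provision for whichever is larger, current load or the forecast a short lead time ahead. The sketch below assumes request rate (requests per second) as the load signal and a fixed, hypothetical per-replica capacity (`rps_per_replica`); the traffic history is made up. Managed predictive-scaling features use their own forecasting models, so treat this as an illustration of the idea rather than how any particular service works.

```python
import math
from collections import defaultdict
from datetime import datetime, timedelta


def hour_of_week(ts: datetime) -> int:
    """Map a timestamp to 0..167 (Monday 00:00 is 0, Sunday 23:00 is 167)."""
    return ts.weekday() * 24 + ts.hour


def build_profile(history: list[tuple[datetime, float]]) -> dict[int, float]:
    """Average the observed requests/sec for each hour of the week."""
    sums: dict[int, float] = defaultdict(float)
    counts: dict[int, int] = defaultdict(int)
    for ts, rps in history:
        h = hour_of_week(ts)
        sums[h] += rps
        counts[h] += 1
    return {h: sums[h] / counts[h] for h in sums}


def planned_replicas(now: datetime, current_rps: float, profile: dict[int, float],
                     rps_per_replica: float, lead: timedelta = timedelta(minutes=30),
                     min_replicas: int = 1) -> int:
    """Provision for the larger of current load and the load forecast `lead` ahead."""
    forecast = profile.get(hour_of_week(now + lead), 0.0)
    demand = max(current_rps, forecast)
    return max(min_replicas, math.ceil(demand / rps_per_replica))


# Four weeks of hypothetical Monday traffic: quiet at 08:00, busy at 09:00.
history = []
for week in range(4):
    monday = datetime(2024, 1, 1) + timedelta(weeks=week)  # 2024-01-01 is a Monday
    history.append((monday.replace(hour=8), 20.0))
    history.append((monday.replace(hour=9), 200.0))
profile = build_profile(history)

# At 08:40 on a later Monday, current load is low, but 09:10 is forecast to be busy.
print(planned_replicas(datetime(2024, 1, 29, 8, 40), 20.0, profile, rps_per_replica=50.0))  # 4
```

Taking the maximum of current and forecast load means a wrong forecast can only over-provision, never starve live traffic; the trade-off is some extra spend when a predicted rush does not arrive.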

But it’s not just about saving money. It’s also about sustainability. Every GPU you don’t run means less wasted energy, lower carbon emissions, and lower operational overhead. Teams that treat GPU usage as a variable cost rather than a fixed one gain real flexibility. They can experiment more, deploy faster, and respond to user demand without begging for budget approvals.

Below, you’ll find real-world guides on how to set this up in practice, from configuring autoscaling rules in AWS to optimizing batch jobs for cost and speed. You’ll see how companies use scheduling, spot instances, and usage analytics to keep their AI running lean. No fluff. No theory. Just what works.
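On the scheduling side, the core calculation is deciding when to start a batch job so it runs during the cheapest hours. The sketch below assumes you already have an hourly price forecast (the prices in the example are made up) and that the job needs a fixed number of contiguous hours; `cheapest_start` is a hypothetical helper that slides a window across the forecast to find the cheapest start. It ignores spot interruptions, so a job on spot GPUs would still need checkpointing.

```python
def cheapest_start(hourly_prices: list[float], job_hours: int) -> tuple[int, float]:
    """Return (start_hour, total_cost) of the cheapest contiguous run of job_hours.

    hourly_prices[i] is the forecast GPU price for hour i of the planning horizon.
    """
    if not 0 < job_hours <= len(hourly_prices):
        raise ValueError("job_hours must be between 1 and the forecast length")
    window = sum(hourly_prices[:job_hours])
    best_start, best_cost = 0, window
    for start in range(1, len(hourly_prices) - job_hours + 1):
        # Slide the window one hour: add the hour entering, drop the hour leaving.
        window += hourly_prices[start + job_hours - 1] - hourly_prices[start - 1]
        if window < best_cost:
            best_start, best_cost = start, window
    return best_start, best_cost


# Hypothetical $/GPU-hour forecast for the next eight hours.
prices = [3.0, 3.0, 1.0, 1.0, 1.0, 2.5, 4.0, 4.0]
print(cheapest_start(prices, job_hours=3))  # (2, 3.0)
```

The sliding window keeps the search linear in the forecast length, which matters little for one night of hourly prices but helps if you score many jobs against a week-long forecast.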

Autoscaling Large Language Model Services: Policies, Signals, and Costs

Learn how to autoscale LLM services effectively using prefill queue size, slots_used, and HBM usage. Reduce costs by up to 60% while keeping latency low with proven policies and real-world benchmarks.
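As a rough illustration of combining the signals named above, the sketch below scales up when any signal runs hot and scales down only when all of them are cold, which helps avoid flapping. The `ServerSignals` type, the reading of `slots_used` as a 0 to 1 fraction, and every threshold are hypothetical assumptions. Which signal matters most depends on your serving stack; some servers pre-allocate KV-cache memory at startup, which can make raw HBM usage a weak signal.

```python
from dataclasses import dataclass


@dataclass
class ServerSignals:
    prefill_queue_size: int  # requests waiting for prefill
    slots_used: float        # fraction of decode slots in use, 0.0 to 1.0
    hbm_usage: float         # fraction of accelerator HBM in use, 0.0 to 1.0


# Hypothetical thresholds; tune them against your own latency targets.
UP = ServerSignals(prefill_queue_size=8, slots_used=0.85, hbm_usage=0.90)
DOWN = ServerSignals(prefill_queue_size=0, slots_used=0.30, hbm_usage=0.50)


def scaling_decision(s: ServerSignals) -> int:
    """Return +1 to add a replica, -1 to remove one, or 0 to hold.

    Scale up if ANY signal is hot, because queueing or memory pressure hurts
    latency first; scale down only if ALL signals are cold.
    """
    if (s.prefill_queue_size > UP.prefill_queue_size
            or s.slots_used > UP.slots_used
            or s.hbm_usage > UP.hbm_usage):
        return 1
    if (s.prefill_queue_size <= DOWN.prefill_queue_size
            and s.slots_used < DOWN.slots_used
            and s.hbm_usage < DOWN.hbm_usage):
        return -1
    return 0


print(scaling_decision(ServerSignals(12, 0.50, 0.60)))  # 1: prefill backlog
print(scaling_decision(ServerSignals(0, 0.10, 0.30)))   # -1: everything idle
```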
