Global Trade Regulations and AI: How Compliance Shapes AI Deployment

When you deploy a large language model that processes user data from Europe, Asia, or Latin America, you’re not just running code. You’re navigating global trade regulations: the national and regional laws that control how data, software, and AI systems cross borders. Sometimes grouped under the label of international data governance, these rules dictate what AI models can access, where they can run, and who can use them. This isn’t theoretical. California’s AI laws and the EU’s AI Act already impose obligations on how AI systems are built and deployed, and export controls from the U.S. Department of Commerce restrict certain AI technologies from being shipped to specific countries. If your PHP app uses OpenAI or another LLM API and handles customer data from multiple regions, these rules already apply to you.

These regulations don’t just affect where you host your servers. They force you to rethink how your AI system works. Take data privacy (also called personal data protection): the legal right of individuals to control how their personal information is used. In practice, it means you need to know what training data your LLM was exposed to. If your model was trained on scraped public data that includes EU citizen records, you could be violating GDPR, even if you never stored that data yourself. That’s why enterprise LLM deployment (also called AI productionization), the process of putting large language models into production systems with proper controls and monitoring, now includes legal audits, data lineage tracking, and compliance checklists. Tools like Microsoft Purview and Databricks aren’t just for IT anymore. Legal and compliance teams increasingly rely on them too.
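To make data lineage tracking concrete, here is a minimal sketch of a lineage record and a check that flags datasets for legal review. The `DatasetRecord` fields and the `needs_review` rule are hypothetical and chosen only for illustration; a real audit would follow your counsel’s criteria, and the same structure translates directly to PHP.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class DatasetRecord:
    """One entry in a hypothetical training-data lineage log."""
    name: str
    source: str                          # where the data came from
    may_contain_eu_personal_data: bool   # assumption: set during data intake
    legal_basis: str = ""                # empty string means "not documented"


def needs_review(records: List[DatasetRecord]) -> List[str]:
    """Return names of datasets that may hold EU personal data
    but have no documented legal basis."""
    return [
        r.name
        for r in records
        if r.may_contain_eu_personal_data and not r.legal_basis.strip()
    ]


# Illustrative data only; these datasets are made up.
records = [
    DatasetRecord("support_tickets", "internal CRM", True, "contract"),
    DatasetRecord("forum_scrape", "public web crawl", True),
    DatasetRecord("synthetic_faq", "generated in-house", False),
]
flagged = needs_review(records)
```

Keeping this record next to each dataset gives an auditor one place to see what went into a model and why you were allowed to use it.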

And it’s not just about privacy. Export controls restrict which AI technologies can leave the U.S. or other regulated countries. Some LLMs with advanced capabilities may be treated as dual-use technologies, meaning they can serve civilian purposes but also cyberwarfare or surveillance. If your PHP script integrates an AI model that can generate code, analyze financial reports, or interpret medical records, you might need a license to deploy it outside your country. This isn’t science fiction. It’s happening right now, and it’s pushing developers to build systems that can switch models based on user location, filter inputs, or even shut down features in high-risk regions.
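The location-based switching described above can be sketched as a small policy lookup. Everything here is an assumption made for illustration: the region codes, the model names, the feature names, and the `resolve_model` helper are hypothetical, and the table does not reflect any actual legal requirement. The sketch is in Python, but the pattern carries over to a PHP app unchanged.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class RegionPolicy:
    """Hypothetical per-region rule set."""
    model: Optional[str]                      # None means AI features are off
    blocked_features: frozenset = frozenset() # features disabled in this region


# Illustrative policy table; the entries are made up.
POLICIES = {
    "US": RegionPolicy(model="full-model"),
    "EU": RegionPolicy(
        model="eu-hosted-model",
        blocked_features=frozenset({"medical_records"}),
    ),
}

# Fail closed: regions without an explicit policy get no model at all.
DEFAULT_POLICY = RegionPolicy(model=None)


def resolve_model(region: str, feature: str) -> Optional[str]:
    """Return the model to use for this region and feature,
    or None if the feature must be disabled there."""
    policy = POLICIES.get(region.upper(), DEFAULT_POLICY)
    if policy.model is None or feature in policy.blocked_features:
        return None
    return policy.model
```

Calling `resolve_model("EU", "medical_records")` returns `None`, so the caller can hide or disable that feature, while `resolve_model("US", "code_generation")` returns `"full-model"`. Defaulting unknown regions to "disabled" is a deliberate choice: a missing policy entry then blocks the feature instead of silently allowing it.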

What you’ll find in this collection isn’t a list of legal jargon. It’s a practical guide for developers who need to ship AI-powered apps without getting fined or shut down. You’ll see how to measure policy adherence, secure your model supply chain, and design systems that respect cross-border data flow rules—all while keeping performance high and costs low. These aren’t theory papers. These are real strategies used by teams running AI in regulated industries like finance, healthcare, and logistics. You don’t need to be a lawyer to get this right. You just need to know what to look for—and what to build.

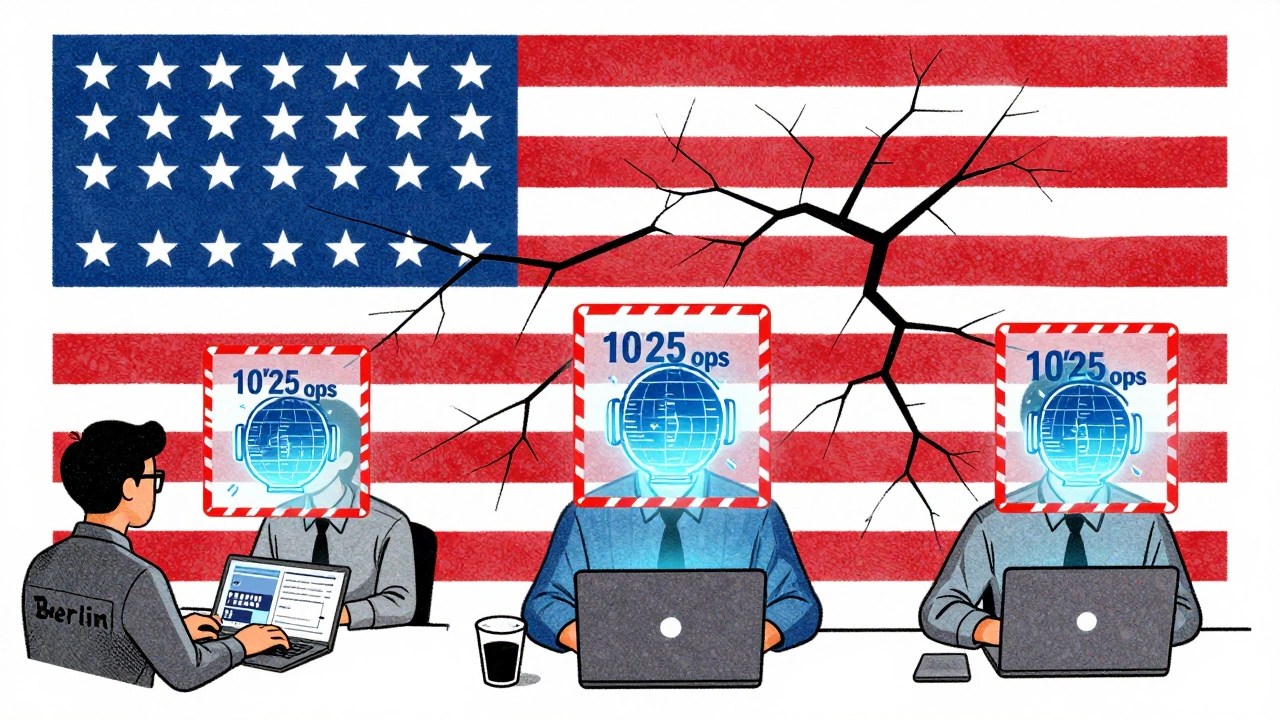

Export Controls and AI Models: How Global Teams Stay Compliant in 2025

In 2025, exporting AI models is tightly regulated. Global teams must understand thresholds, deemed exports, and compliance tools to avoid fines and keep operations running smoothly.
