Export Controls in AI: Compliance, Risk, and Legal Limits for Generative Systems

A generative AI system, one that creates text, images, or code using machine learning models such as large language models (LLMs), can be powerful, but sharing it across borders may break the law. Export controls aren’t just for military tech anymore. They can now reach AI models, training data, and even the code that runs them. If your PHP app uses OpenAI, Mistral, or a custom LLM, and users outside the U.S. access it, you may be subject to these rules.

Export controls tie directly to AI compliance, the set of legal and ethical standards that govern how AI systems are built, used, and shared. The U.S. Department of Commerce’s Bureau of Industry and Security (BIS) treats advanced AI models as dual-use technologies. That means an open-source LLM is not automatically exempt; whether a model is covered depends on its capabilities, how it was released, and which rules are currently in force. Jurisdictions such as Canada, the EU, and Australia have their own versions. And it’s not just about sending code overseas. Hosting an AI service on AWS in Ireland? That can count as an export too. LLM deployment, the process of putting a trained AI model into production, must now include legal checks, not just performance benchmarks.
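To make “where does this model run?” answerable, a team might keep a small inventory of deployments and flag any that sit outside the model’s home jurisdiction for export review. The sketch below assumes the model originated in the U.S.; the `Deployment` record, the `REGION_COUNTRY` table, and the `needs_export_review` helper are hypothetical, and a flag only means “ask counsel,” not “this is a controlled export.”

```python
from dataclasses import dataclass

# Hypothetical mapping of a few AWS region codes to ISO country codes.
# Extend it with the regions your team actually deploys to.
REGION_COUNTRY = {
    "us-east-1": "US",     # N. Virginia
    "us-west-2": "US",     # Oregon
    "eu-west-1": "IE",     # Ireland
    "eu-central-1": "DE",  # Frankfurt
}

@dataclass
class Deployment:
    model_id: str
    region: str

def needs_export_review(deployments, home_country="US"):
    """Return (model_id, region, country) for every deployment hosted outside
    home_country. Regions missing from the table are flagged as UNKNOWN,
    because we cannot place them in a jurisdiction."""
    flagged = []
    for d in deployments:
        country = REGION_COUNTRY.get(d.region, "UNKNOWN")
        if country != home_country:
            flagged.append((d.model_id, d.region, country))
    return flagged

if __name__ == "__main__":
    inventory = [
        Deployment("support-bot-v2", "us-east-1"),
        Deployment("support-bot-v2", "eu-west-1"),
        Deployment("code-assist", "ap-south-1"),
    ]
    for item in needs_export_review(inventory):
        print(item)
```

Flagging unknown regions by default is a deliberate choice: a deployment nobody can locate is exactly the one a reviewer should see.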

These rules aren’t theoretical. California’s AI transparency law, Colorado’s deepfake rules, and Illinois’ biometric privacy laws all add obligations that sit alongside export controls. If your app uses user data to fine-tune a model, and that data came from the EU, you’re handling personal information under GDPR. If your model was trained on data scraped from Chinese websites, you might run afoul of China’s data security laws. Data privacy, the protection of personal or sensitive information from unauthorized access or exposure, isn’t optional: it’s a legal requirement that affects how you package and deliver AI.

What does this mean for you as a developer? You can’t just plug in an API and call it done. You need to track where your model runs, who uses it, and what data flows through it. Tools like Microsoft Purview and Databricks help with governance, but they don’t solve export issues on their own. You need to ask: Is this model on a restricted list? Are users in sanctioned countries accessing it? Are you storing training data in a country with strict export laws? The answers, not your accuracy score, determine your risk.
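The three questions above can be turned into a pre-flight check that runs before a request reaches the model. This is a minimal sketch, not a screening tool: `RESTRICTED_MODELS`, `BLOCKED_COUNTRIES`, and `RESTRICTED_STORAGE` are hypothetical placeholder sets you would populate from your own legal review, and the codes inside them are deliberately fake so they don’t imply anything about a real sanctions list.

```python
from dataclasses import dataclass

# Placeholder data: replace with lists maintained by your compliance team.
# "XA", "XB", "XC" are intentionally fake codes, not real sanctions entries.
RESTRICTED_MODELS = {"frontier-model-x"}
BLOCKED_COUNTRIES = {"XA", "XB"}
RESTRICTED_STORAGE = {"XC"}

@dataclass
class Request:
    model_id: str
    user_country: str            # ISO 3166-1 alpha-2, e.g. from IP geolocation
    training_data_location: str  # where the serving model's training data is stored

def compliance_flags(req):
    """Return reasons this request needs review; an empty list means none found."""
    flags = []
    if req.model_id in RESTRICTED_MODELS:
        flags.append(f"model {req.model_id} is on the restricted list")
    if req.user_country in BLOCKED_COUNTRIES:
        flags.append(f"user country {req.user_country} is blocked")
    if req.training_data_location in RESTRICTED_STORAGE:
        flags.append(f"training data stored in {req.training_data_location}")
    return flags

if __name__ == "__main__":
    print(compliance_flags(Request("support-bot-v2", "XA", "US")))
    # prints ['user country XA is blocked']
```

Country derived from an IP address is easy to spoof with a VPN, so a check like this is one signal among several rather than proof of where a user is.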

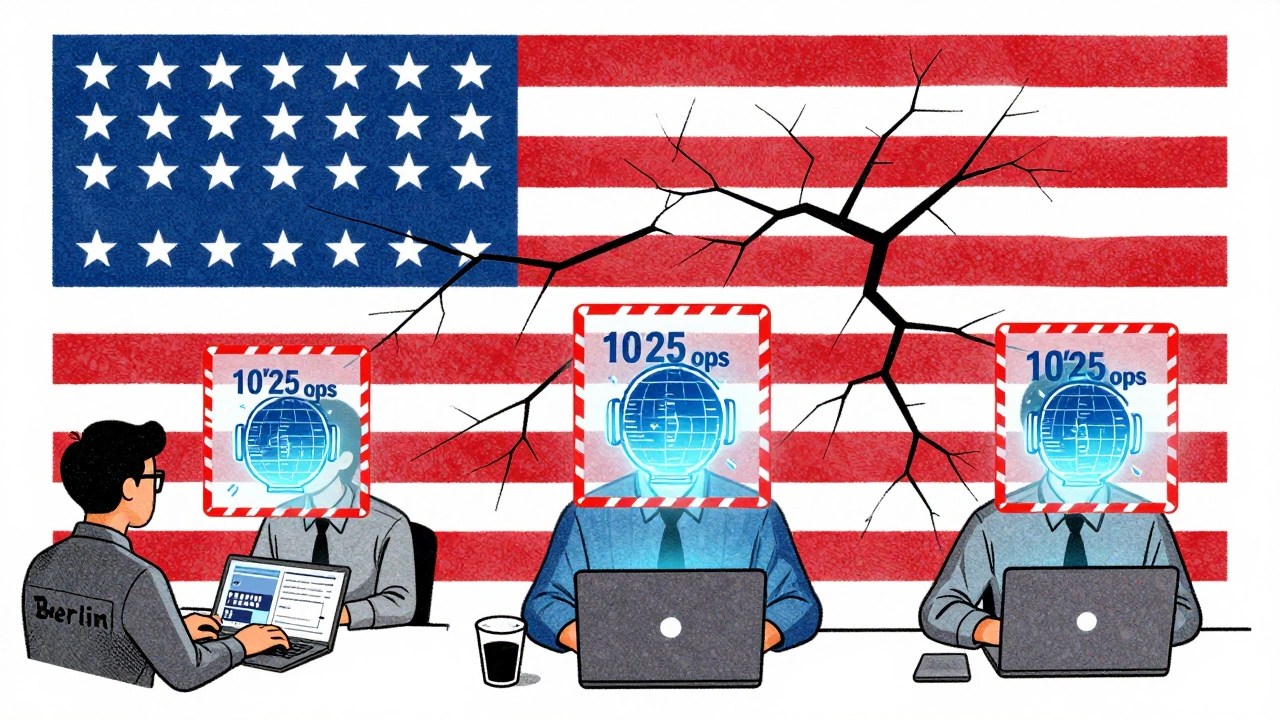

Many teams ignore this until they get a letter from a regulator. Others assume open source means free to use anywhere. It doesn’t. A model built with U.S.-origin technology and hosted in Germany might still be subject to U.S. export rules. And if you’re using Composer packages to pull in AI libraries, those dependencies might be flagged in a compliance review too. Supply chain security for LLMs isn’t just about malware; it’s also about legal compliance.
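Because `composer.lock` records every resolved package under its `packages` and `packages-dev` keys, a short script can compare your dependency tree against an internal review list in CI. A sketch, assuming a hypothetical `REVIEW_LIST` maintained by your compliance team; the package name in it is invented for illustration, and a match means “get a review,” not “this package is controlled.”

```python
import json

# Hypothetical internal watchlist of Composer package names that need a
# compliance review before shipping. The entry below is an invented example.
REVIEW_LIST = {"example-vendor/llm-weights-loader"}

def packages_from_lock(lock):
    """Yield (name, version) for runtime and dev packages in a parsed composer.lock."""
    for section in ("packages", "packages-dev"):
        # "or []" guards against a missing or null section.
        for pkg in lock.get(section) or []:
            yield pkg["name"], pkg.get("version", "?")

def flagged_packages(lock, review_list=REVIEW_LIST):
    """Return (name, version) pairs whose package name is on the review list."""
    return [(name, ver) for name, ver in packages_from_lock(lock) if name in review_list]

def main(path="composer.lock"):
    """Print any flagged packages and return a CI-friendly exit code."""
    with open(path, encoding="utf-8") as fh:
        lock = json.load(fh)
    hits = flagged_packages(lock)
    for name, version in hits:
        print(f"review needed: {name} {version}")
    return 1 if hits else 0
```

In a CI step, calling `raise SystemExit(main())` makes a match fail the build, which forces the review to happen before the dependency ships.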

Below, you’ll find real guides on how state laws affect AI, how to measure governance KPIs, how to secure model weights, and how to calculate risk-adjusted ROI. These aren’t theory pieces. They’re checklists, benchmarks, and legal maps from teams who’ve been audited, fined, or blocked. If you’re building AI in PHP, you need to know this stuff. Your code may work, but the law doesn’t care about your code.

Export Controls and AI Models: How Global Teams Stay Compliant in 2025

In 2025, exporting AI models is tightly regulated. Global teams must understand thresholds, deemed exports, and compliance tools to avoid fines and keep operations running smoothly.
