Data Residency: Where Your AI Data Lives and Why It Matters

When you use AI tools like chatbots or content generators, your data doesn’t just disappear into the cloud. It lands somewhere specific. Data residency is the physical and legal location where data is stored and processed. It is closely related to data sovereignty, the principle that data is subject to the laws of the country where it sits, and together they determine which laws apply to your data and who can access it. If your users are in Europe, GDPR restricts transferring their personal data outside the EU. If you’re in California, state laws like the CPRA govern how you collect and use personal information, and newer rules require transparency about where training data comes from. Ignoring data residency isn’t just risky: regulators can fine you or order you to stop processing, which can take your AI app offline.

It’s not just about where you store data. It’s also about where data is processed, used for training, and even temporarily cached. Enterprise data governance, the framework for managing data use, access, and compliance across teams, is what makes this manageable. Tools like Microsoft Purview or Databricks help you map data flows, tag sensitive info, and enforce rules. But governance alone won’t fix it if your LLM provider sends your customer emails to a server in a country with weaker privacy protections. That’s why AI data privacy, the practice of protecting user data during AI processing, must be built into your architecture, not bolted on later.
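
To make the idea of mapping data flows and enforcing rules concrete, here is a minimal sketch in Python. It is not how Microsoft Purview or Databricks work internally. The `ALLOWED_REGIONS` policy, the `DataFlow` record, the region names, and the `find_violations` helper are all hypothetical names invented for illustration. The point is only that every stage (storage, inference, training, cache) gets checked, not just storage.

```python
from dataclasses import dataclass

# Hypothetical policy: for each data-origin jurisdiction, the regions
# where that data may be stored or processed. Real rules are set by
# legal review, not hard-coded like this.
ALLOWED_REGIONS = {
    "EU": {"eu-west", "eu-central"},
    "US": {"us-east", "us-west", "eu-west", "eu-central"},
}


@dataclass(frozen=True)
class DataFlow:
    name: str    # dataset or pipeline name
    origin: str  # jurisdiction of the data subjects
    stage: str   # "storage", "inference", "training", or "cache"
    region: str  # where this stage actually runs


def find_violations(flows):
    """Return the flows whose region is not permitted for their origin.

    An origin with no policy entry is treated as a violation, so
    unmapped data fails closed instead of slipping through.
    """
    violations = []
    for flow in flows:
        allowed = ALLOWED_REGIONS.get(flow.origin)
        if allowed is None or flow.region not in allowed:
            violations.append(flow)
    return violations


flows = [
    DataFlow("support-emails", "EU", "storage", "eu-west"),
    DataFlow("support-emails", "EU", "inference", "us-east"),
    DataFlow("support-emails", "EU", "cache", "eu-central"),
]

for flow in find_violations(flows):
    print(f"{flow.name}: {flow.stage} in {flow.region} "
          f"is not allowed for {flow.origin} data")
```

In this example the stored copy and the cache stay in the EU, but the inference call leaves it, which is exactly the kind of gap a storage-only review would miss.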

Many developers think data residency is just a legal headache. But it’s also a performance issue. Models trained on local data often understand regional language, slang, and context better. If your users are in Japan and your model was trained only on U.S. data, your chatbot will miss the mark. And when you’re scaling, data residency affects cost. Moving data across borders can trigger compliance reviews, add latency to inference, or force you to pay for extra infrastructure. Some companies now run separate AI instances per region, not because they want to, but because they have to.
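
Running a separate instance per region usually implies some routing layer that keeps each request inside its own region. The sketch below shows one way that could look, under stated assumptions: the `REGIONAL_ENDPOINTS` table, the `example.com` URLs, the `ResidencyError` class, and the `endpoint_for` function are hypothetical and do not belong to any real provider’s API.

```python
# Hypothetical mapping from a user's region to the in-region deployment
# that is allowed to handle their requests.
REGIONAL_ENDPOINTS = {
    "EU": "https://llm.eu.example.com/v1/generate",
    "US": "https://llm.us.example.com/v1/generate",
    "JP": "https://llm.jp.example.com/v1/generate",
}


class ResidencyError(Exception):
    """Raised when no in-region deployment exists for a user."""


def endpoint_for(user_region: str) -> str:
    """Return the endpoint for the user's region.

    There is deliberately no default: silently falling back to another
    region would move the data across a border without anyone noticing.
    """
    try:
        return REGIONAL_ENDPOINTS[user_region]
    except KeyError:
        raise ResidencyError(
            f"No in-region deployment for {user_region!r}; refusing to route"
        ) from None


print(endpoint_for("JP"))
```

The design choice worth noting is the missing fallback. A router that defaults to the nearest or cheapest region is convenient, but it turns an outage or a new market into a quiet cross-border transfer.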

It’s getting harder to ignore. In 2025, generative AI laws, regulations that control how AI systems handle personal data and generate content, are popping up everywhere: not just in the EU and U.S., but in Canada, Brazil, and even parts of Southeast Asia. California requires transparency about training data sources. Colorado regulates deepfakes in political ads. Illinois restricts biometric data use. If your AI touches any of these regions, you need to know where your data lives, who trained your model, and what rules apply.

This collection of posts doesn’t just talk about data residency in theory. It shows you how real teams are handling it: how they enforce isolation in multi-tenant apps, how they audit model training data, how they use confidential computing to protect data during inference, and how they avoid fines by mapping every data flow. You’ll see what works, what backfires, and how to build systems that stay compliant without slowing down innovation.

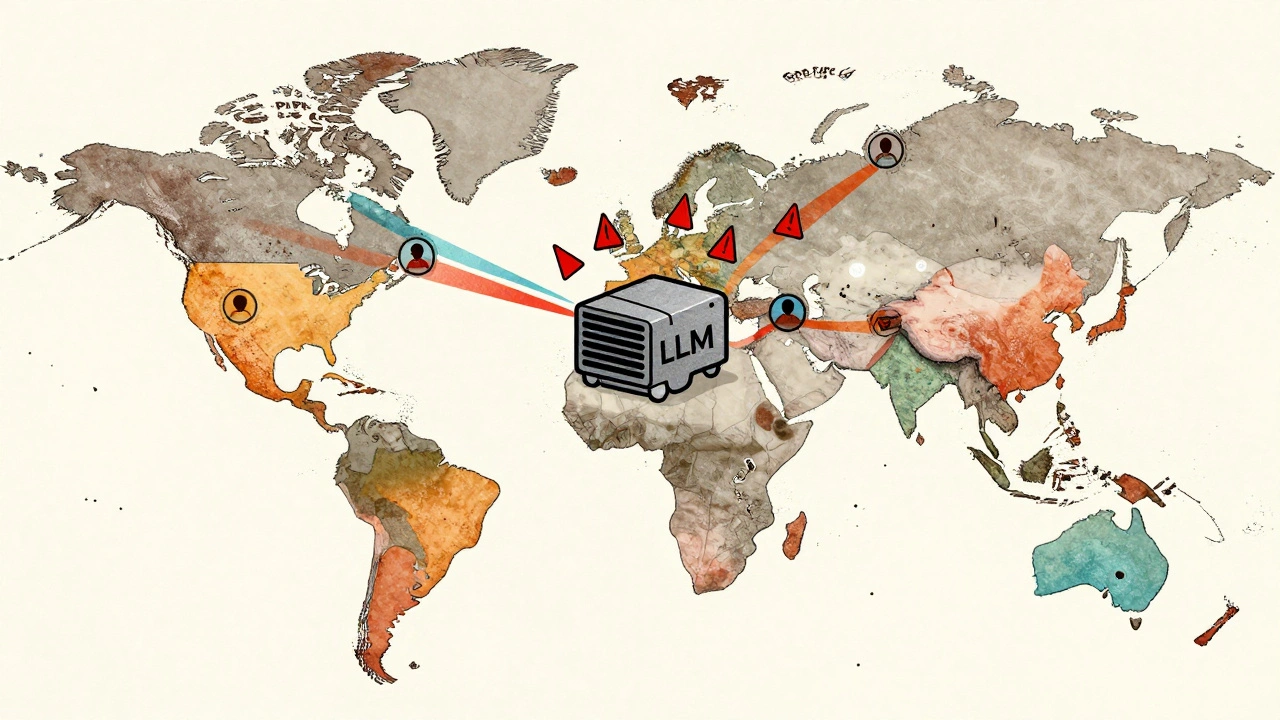

Data Residency Considerations for Global LLM Deployments

Data residency rules for global LLM deployments vary by country and can lead to heavy fines if ignored. Learn how to legally deploy AI models across borders without violating privacy laws like GDPR, PIPL, or LGPD.
