Corpus Composition: How Data Shapes AI Learning and Accuracy

Corpus composition is the selection, structure, and balance of the text data used to train AI models. Also known as training data curation, it’s the hidden foundation behind every chatbot, summarizer, and translator you use. It’s not just about having lots of text; it’s about having the right kind. A poorly built corpus can make even the most advanced model spit out nonsense, repeat biases, or fail on simple tasks. Companies that skip careful corpus design think they’re saving time. They’re actually building a house on sand.

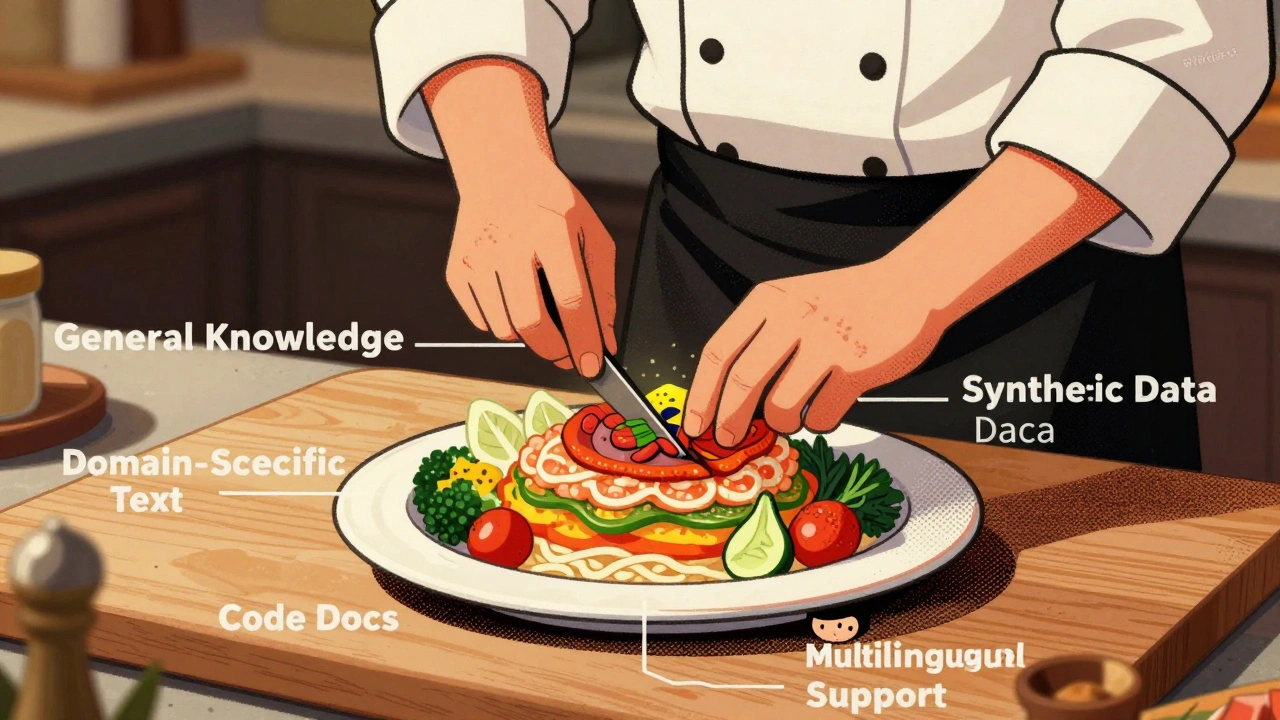

Think of training data (the raw text used to teach AI how language works) as the ingredients in a recipe. If you only feed an AI news articles, it won’t understand casual chat. If you use mostly forum posts, it might pick up slang and errors. Strong models typically use a mix: books for grammar, scientific papers for precision, customer service logs for real-world phrasing, and even code comments for technical context. Data quality, meaning how clean, accurate, and representative the training text is, matters more than quantity. A smaller, clean, well-labeled dataset often beats a much larger messy one. That’s why tools like Microsoft Purview and Databricks are now part of AI workflows: they don’t just store data, they help audit it.
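A common way to turn "a mix of sources" into something concrete is to assign each source a sampling weight and draw training examples in those proportions. The sketch below is only an illustration: the source names and the weights in `MIXTURE` are hypothetical placeholders, not recommended ratios, and `sample_sources` / `mixture_report` are helper names invented for this example.

```python
import random
from collections import Counter

# Hypothetical mixture weights; real ratios depend on the target domain
# and are usually tuned experimentally.
MIXTURE = {
    "books": 0.35,
    "scientific_papers": 0.25,
    "support_logs": 0.25,
    "code_comments": 0.15,
}


def sample_sources(weights, n, seed=0):
    """Draw n source labels in proportion to the given mixture weights."""
    rng = random.Random(seed)  # seeded so the draw is reproducible
    names = list(weights)
    probs = [weights[name] for name in names]
    return rng.choices(names, weights=probs, k=n)


def mixture_report(draws):
    """Return the observed share of each source among the draws."""
    counts = Counter(draws)
    total = len(draws)
    return {name: counts[name] / total for name in counts}


if __name__ == "__main__":
    draws = sample_sources(MIXTURE, 10_000)
    for name, share in sorted(mixture_report(draws).items()):
        print(f"{name:>18}: {share:.3f}")
```

Checking the observed shares against the intended weights is a cheap sanity test that the mixture you planned is the mixture the model actually sees.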

Corpus composition also shapes how truthful a large language model (an AI system trained on massive text datasets to generate human-like responses) turns out to be. If your corpus includes outdated medical information or biased opinions, the model can learn them as facts. The TruthfulQA benchmark shows that even top models often repeat common misconceptions found in human-written text. That’s why modern teams track source origins, remove duplicates, filter toxic content, and balance demographic representation. It’s not just ethics; it’s performance. A model trained on a diverse, verified corpus tends to answer correctly more often, hallucinate less, and earn more user trust.
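Several of those cleaning steps can be sketched in a few lines. The example below keeps a `source` label on every document for provenance, drops exact duplicates after light normalization (lowercasing and collapsing whitespace), and filters documents that contain a blocklisted term. It is a minimal sketch under stated assumptions: the `Document` type, the helper names, and the `BLOCKLIST` terms are hypothetical, and real pipelines usually add near-duplicate detection and trained toxicity classifiers instead of a word list.

```python
import hashlib
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    text: str
    source: str  # provenance: where this text came from


# Hypothetical placeholder terms; production filters typically use classifiers.
BLOCKLIST = {"badword1", "badword2"}


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivially different copies match."""
    return re.sub(r"\s+", " ", text.strip().lower())


def fingerprint(text: str) -> str:
    """Hash the normalized text to detect exact duplicates cheaply."""
    return hashlib.sha256(normalize(text).encode("utf-8")).hexdigest()


def is_flagged(text: str) -> bool:
    """Return True if any token of the text appears in the blocklist."""
    tokens = set(re.findall(r"[a-z0-9']+", text.lower()))
    return not tokens.isdisjoint(BLOCKLIST)


def clean_corpus(docs):
    """Keep the first copy of each document, skipping flagged ones."""
    seen = set()
    kept = []
    for doc in docs:
        fp = fingerprint(doc.text)
        if fp in seen or is_flagged(doc.text):
            continue
        seen.add(fp)
        kept.append(doc)
    return kept


if __name__ == "__main__":
    docs = [
        Document("Hello  world", "forum"),
        Document("hello world", "books"),  # duplicate after normalization
        Document("contains badword1 here", "forum"),  # flagged
        Document("Aspirin thins blood.", "medical"),
    ]
    for doc in clean_corpus(docs):
        print(doc.source, "|", doc.text)
```

Because each kept document still carries its `source`, you can later count how much of the cleaned corpus came from each origin, which is the starting point for the source tracking and balancing described above.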

You’ll find posts here that dig into how companies build these datasets, what happens when they get it wrong, and how techniques like retrieval-augmented generation (RAG) help fill gaps after training. Some posts show how to audit your own data for bias. Others break down how GPU scaling affects training speed when you’re working with massive corpora. There’s even a guide on how export controls and state laws in California and Colorado now legally require you to document your corpus sources. This isn’t theory; it’s what’s happening in production right now. Whether you’re scaling an AI app or just trying to understand why your chatbot keeps giving weird answers, the explanation starts with the data it learned from.

How to Build a Domain-Aware LLM: The Right Pretraining Corpus Composition

Learn how to build domain-aware LLMs by strategically composing pretraining corpora with the right mix of data types, ratios, and preprocessing techniques to boost accuracy while reducing costs.
