Content Safety in AI: Protecting Users, Data, and Compliance

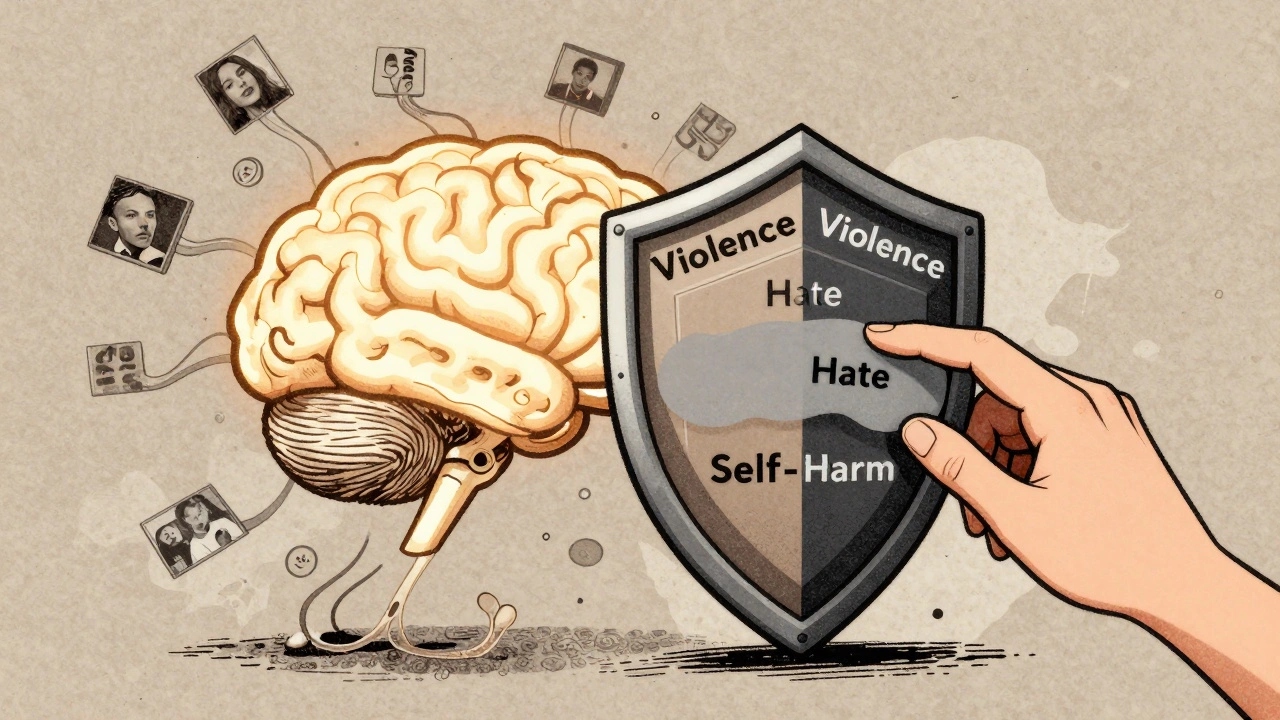

When we talk about content safety, we mean the systems and practices that prevent harmful, misleading, or illegal AI-generated output. Often grouped under the broader heading of AI safety, it’s what keeps your users from seeing hate speech, fake medical advice, or leaked private data, no matter how convincing the AI sounds. It’s not just filters or keyword blocks. Real content safety means understanding how models hallucinate, how training data leaks, and how users can be manipulated, even when the harm is unintended.

Take LLM governance: the framework of policies, audits, and controls that ensure large language models are used responsibly. Without it, even the most accurate AI can break laws. For example, a model trained on scraped medical forums might give dangerous advice, or a customer service bot could accidentally reveal a user’s credit card history. That’s why companies use tools like Microsoft Purview or Databricks to track data lineage and enforce access rules. And it’s not just about compliance; it’s about trust. If users think your AI is unreliable or unsafe, they’ll walk away, no matter how smart it is.
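The customer-service example is the kind of leak an output redaction layer is meant to catch. As a minimal sketch, assuming Python and a plain-text model response, the helper below masks digit runs of card length (13 to 19 digits, optionally separated by spaces or hyphens) that also pass the Luhn checksum used by payment card numbers. The names `redact_card_numbers`, `luhn_valid`, and `CARD_CANDIDATE` are hypothetical, and this is not how Purview or Databricks enforce access rules; it only illustrates one last-line guardrail on output.

```python
import re

# Hypothetical output guardrail (illustrative sketch, not a library API).
# Candidate card numbers: 13-19 digits, optionally split by single spaces or hyphens.
CARD_CANDIDATE = re.compile(r"\b\d(?:[ -]?\d){12,18}\b")


def luhn_valid(digits: str) -> bool:
    """Return True if a string of digits passes the Luhn checksum."""
    total = 0
    # Walk from the rightmost digit and double every second digit.
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def redact_card_numbers(text: str, mask: str = "[REDACTED CARD]") -> str:
    """Mask card-like numbers in model output that also pass the Luhn check."""

    def _replace(match: re.Match) -> str:
        digits = re.sub(r"[ -]", "", match.group())
        return mask if luhn_valid(digits) else match.group()

    return CARD_CANDIDATE.sub(_replace, text)


print(redact_card_numbers("Card 4111 1111 1111 1111 is on file."))
# Card [REDACTED CARD] is on file.
```

Requiring a Luhn match keeps most order IDs and reference numbers from being masked by accident, at the cost of missing mistyped card numbers, so a real deployment would pair a filter like this with upstream access controls rather than rely on it alone.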

AI hallucinations, when a model confidently generates false information that sounds true, are one of the biggest threats to content safety. On the TruthfulQA benchmark, even top models get roughly 30% of questions wrong. That’s not a bug; it follows from how these models work, since they produce the most plausible continuation rather than a verified fact. Better prompts alone won’t fix it. What helps is grounding responses in your own verified data with retrieval-augmented generation (RAG), adding human review layers, and setting up real-time monitoring. And when you’re deploying AI across borders, you’re not just fighting hallucinations; you’re fighting legal risk. State laws in California and Illinois now require disclosure of AI-generated content, especially for deepfakes or insurance-related claims. Ignoring them isn’t merely risky; it’s illegal.
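To make "grounding plus human review" concrete, here is a minimal sketch in Python. It assumes RAG has already returned passages from your verified data, and it flags any answer sentence whose content words mostly do not appear in any retrieved passage; a non-empty flag list routes the answer to a human reviewer. Word overlap is a deliberately crude stand-in (production systems more often use entailment models or citation checks), and `check_grounding`, `GroundingReport`, `SUPPORT_THRESHOLD`, and the stopword list are hypothetical choices.

```python
import re
from dataclasses import dataclass, field

# Hypothetical threshold: share of a sentence's content words that must
# appear in at least one retrieved passage for the sentence to count as supported.
SUPPORT_THRESHOLD = 0.6
# Hypothetical minimal stopword list; real systems would use a proper one.
STOPWORDS = {"the", "a", "an", "is", "are", "of", "to", "and", "in", "for", "on", "it", "with"}


def content_words(text: str) -> set[str]:
    """Lowercase alphanumeric tokens minus a tiny stopword list."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}


@dataclass
class GroundingReport:
    unsupported: list[str] = field(default_factory=list)

    @property
    def needs_human_review(self) -> bool:
        # Any unsupported sentence sends the whole answer to a reviewer.
        return bool(self.unsupported)


def check_grounding(answer: str, passages: list[str]) -> GroundingReport:
    """Flag answer sentences not lexically supported by any retrieved passage."""
    passage_words = [content_words(p) for p in passages]
    report = GroundingReport()
    for sentence in re.split(r"(?<=[.!?])\s+", answer.strip()):
        words = content_words(sentence)
        if not words:
            continue
        # Best overlap against any single passage; no passages means no support.
        best = max((len(words & pw) / len(words) for pw in passage_words), default=0.0)
        if best < SUPPORT_THRESHOLD:
            report.unsupported.append(sentence)
    return report


report = check_grounding(
    "Refunds are processed within 14 days of approval. Premium members get refunds instantly.",
    ["Refunds are processed within 14 days of approval."],
)
print(report.unsupported)  # ['Premium members get refunds instantly.']
print(report.needs_human_review)  # True
```

Here the second sentence shares only one content word out of five with the retrieved passage, so it is flagged and the response is held for review instead of being shown to the user.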

And then there’s generative AI compliance: the ongoing process of aligning AI use with legal, ethical, and business standards. This isn’t a one-time audit; it’s continuous. You need to monitor usage patterns, because high token consumption can mean more exposure to risky outputs. You need to secure your model weights and dependencies, because a compromised container can leak data across tenants. And you need to measure policy adherence and mean time to resolve (MTTR) incidents, not just to check boxes but to keep your system from breaking under pressure.
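Policy adherence and MTTR reduce to simple arithmetic once violations and incidents are logged. The sketch below assumes adherence means the share of checked outputs with no policy violation, and that MTTR means mean time to resolve, averaged over resolved incidents only; the `Incident` record and both function names are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean
from typing import Optional


@dataclass
class Incident:
    """Hypothetical incident record: when a policy breach was detected and resolved."""
    detected_at: datetime
    resolved_at: Optional[datetime] = None  # None while the incident is still open


def policy_adherence(checked: int, violations: int) -> float:
    """Share of checked outputs with no policy violation, from 0.0 to 1.0."""
    if checked <= 0:
        raise ValueError("no outputs were checked")
    return (checked - violations) / checked


def mttr(incidents: list[Incident]) -> timedelta:
    """Mean time to resolve, averaged over resolved incidents only."""
    durations = [
        (inc.resolved_at - inc.detected_at).total_seconds()
        for inc in incidents
        if inc.resolved_at is not None
    ]
    if not durations:
        raise ValueError("no resolved incidents to average")
    return timedelta(seconds=mean(durations))


start = datetime(2024, 1, 1, 9, 0)
incidents = [
    Incident(start, start + timedelta(hours=2)),
    Incident(start, start + timedelta(hours=4)),
    Incident(start),  # still open, excluded from MTTR
]
print(policy_adherence(1000, 25))  # 0.975
print(mttr(incidents))  # 3:00:00
```

Excluding open incidents rather than counting them as zero keeps MTTR from looking better than it is; tracking the count of open incidents alongside it covers the gap.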

What you’ll find here aren’t theory papers or vendor brochures. These are hands-on guides from developers who’ve been burned by unsafe AI outputs. They show how to build guardrails that actually work, how to measure risk-adjusted ROI, and how to avoid joining the 86% of AI projects said to fail in production because no one thought about safety until it was too late.

Content Moderation for Generative AI: How Safety Classifiers and Redaction Keep Outputs Safe

Learn how safety classifiers and redaction techniques prevent harmful content in generative AI outputs. Explore real-world tools, accuracy rates, and best practices for responsible AI deployment.
