AI Redaction: Securely Remove Sensitive Data from AI-Generated Content

AI redaction is the process of automatically identifying and removing personally identifiable or confidential information from text generated by large language models. Also known as automated data masking, it’s not just a nice-to-have: in many regulated industries, keeping this data out of your output is a legal requirement. If your app generates customer support replies, medical summaries, or financial reports using AI, it may be emitting names, addresses, SSNs, or health records without you realizing it. And if that data gets leaked, you’re on the hook, not the AI.

Data privacy, the practice of protecting personal information from unauthorized access or exposure, is the backbone of AI redaction. Regulations like GDPR, HIPAA, and state laws in California and Colorado don’t care if the leak came from a human or a model. They care that the data was exposed. That’s why redaction isn’t just about deleting words; it’s about understanding context. A model might say "John Smith’s card ended in 4567" and you think you’re safe because you removed "John Smith." But what if "4567" is enough to re-identify someone when paired with other public data? True redaction needs pattern recognition, entity classification, and sometimes even semantic understanding.
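To make the "John Smith" example concrete, here is a minimal rule-based sketch in Python. It is an illustration under stated assumptions, not a complete redactor: the `redact` function, the `known_names` parameter, and the two patterns are hypothetical, and a regex pass like this covers only the pattern-recognition part. Entity classification and semantic understanding would need a separate model.

```python
import re

# Hypothetical patterns for illustration only; real pipelines need far broader coverage.
SSN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")
# Keeps the lead-in phrase (group 1) and masks only the trailing four digits.
CARD_TAIL = re.compile(r"(card\s+end(?:ed|ing|s)\s+in\s+)\d{4}\b", re.IGNORECASE)


def redact(text, known_names=()):
    """Mask known names, SSN-shaped numbers, and card tails in text."""
    # Names come from a caller-supplied list (an assumption); no NER is done here.
    for name in known_names:
        text = re.sub(re.escape(name), "[NAME]", text, flags=re.IGNORECASE)
    text = SSN.sub("[SSN]", text)
    text = CARD_TAIL.sub(lambda m: m.group(1) + "[CARD_DIGITS]", text)
    return text


print(redact("John Smith's card ended in 4567", known_names=["John Smith"]))
```

Removing the name alone would have left the card digits in place; masking both closes the gap described above, at least for phrasings the pattern anticipates.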

LLM security, the set of practices that protect large language models from data leakage, prompt injection, and output exposure, goes hand in hand with redaction. You can train a model on clean data, lock down its API, and still end up with exposed info in its responses. That’s why redaction often sits at the final output layer, like a filter between the AI and your users. Tools like confidential computing and encryption-in-use help protect the model during inference, but they don’t fix bad output. Redaction does.
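The "filter between the AI and your users" idea can be sketched as a wrapper around whatever function produces model text. Everything named here is an assumption for illustration: `with_redaction`, `redact_output`, and `fake_model` are hypothetical, and `fake_model` stands in for a real LLM call.

```python
import re
from typing import Callable

# Hypothetical patterns; a production filter would cover many more entity types.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b")


def redact_output(text: str) -> str:
    """Mask email addresses and US-style phone numbers."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


def with_redaction(generate: Callable[[str], str]) -> Callable[[str], str]:
    """Wrap a text generator so every response passes through redaction."""
    def guarded(prompt: str) -> str:
        return redact_output(generate(prompt))
    return guarded


def fake_model(prompt: str) -> str:
    # Stand-in for a real model call (assumption for this sketch).
    return "Contact jane.doe@example.com or 555-123-4567 for details."


safe_generate = with_redaction(fake_model)
print(safe_generate("How do I reach support?"))
```

Because the wrapper only sees the final string, it works the same way regardless of how the model was trained or hosted, which is the point of placing redaction at the output layer.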

And it’s not just about legal risk. Customers won’t trust your AI if they think it’s casually sharing their data. Imagine a chatbot replying to a user: "Your last appointment was with Dr. Lee on May 3. We’ve updated your file." That’s not helpful—that’s a violation. Redaction catches that before it ever leaves your system.

You won’t find theory papers or vendor brochures in this collection. These are real, battle-tested approaches from developers who’ve had to fix broken redaction pipelines under pressure. You’ll see how teams use rule-based filters, machine learning classifiers, and hybrid systems to catch PII in messy, unstructured AI output. You’ll learn how to test redaction accuracy with real datasets, how to avoid false negatives that slip through, and why some "off-the-shelf" tools fail in production. You’ll also find guides on integrating redaction into existing LLM workflows, whether you’re using LangChain, LiteLLM, or raw API calls.
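As a rough sketch of what testing for false negatives can look like, the snippet below runs a redactor over labelled samples and reports which sensitive strings survived. The samples are synthetic and the names (`redact`, `evaluate`, `SAMPLES`) are hypothetical; a real evaluation would use a much larger labelled dataset.

```python
import re

# Deliberately narrow pattern: it only matches hyphenated SSNs.
SSN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def redact(text):
    return SSN.sub("[SSN]", text)


def evaluate(samples, redactor):
    """Return (recall, misses) over labelled sensitive strings.

    A miss is a labelled string still present after redaction,
    i.e. a false negative.
    """
    total = 0
    misses = []
    for text, secrets in samples:
        cleaned = redactor(text)
        for secret in secrets:
            total += 1
            if secret in cleaned:
                misses.append(secret)
    recall = (total - len(misses)) / total if total else 1.0
    return recall, misses


# Synthetic examples (assumption): each pair is (text, strings that must be removed).
SAMPLES = [
    ("SSN on file: 123-45-6789.", ["123-45-6789"]),
    ("SSN on file: 123 45 6789.", ["123 45 6789"]),
]

recall, misses = evaluate(SAMPLES, redact)
print(recall, misses)
```

The space-separated SSN slips through because the pattern expects hyphens, which is the kind of formatting variation that tends to surface only when a filter is tested against messy output.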

There’s no magic bullet. But there are proven patterns. And if you’re building anything that touches human data, you need to know them.

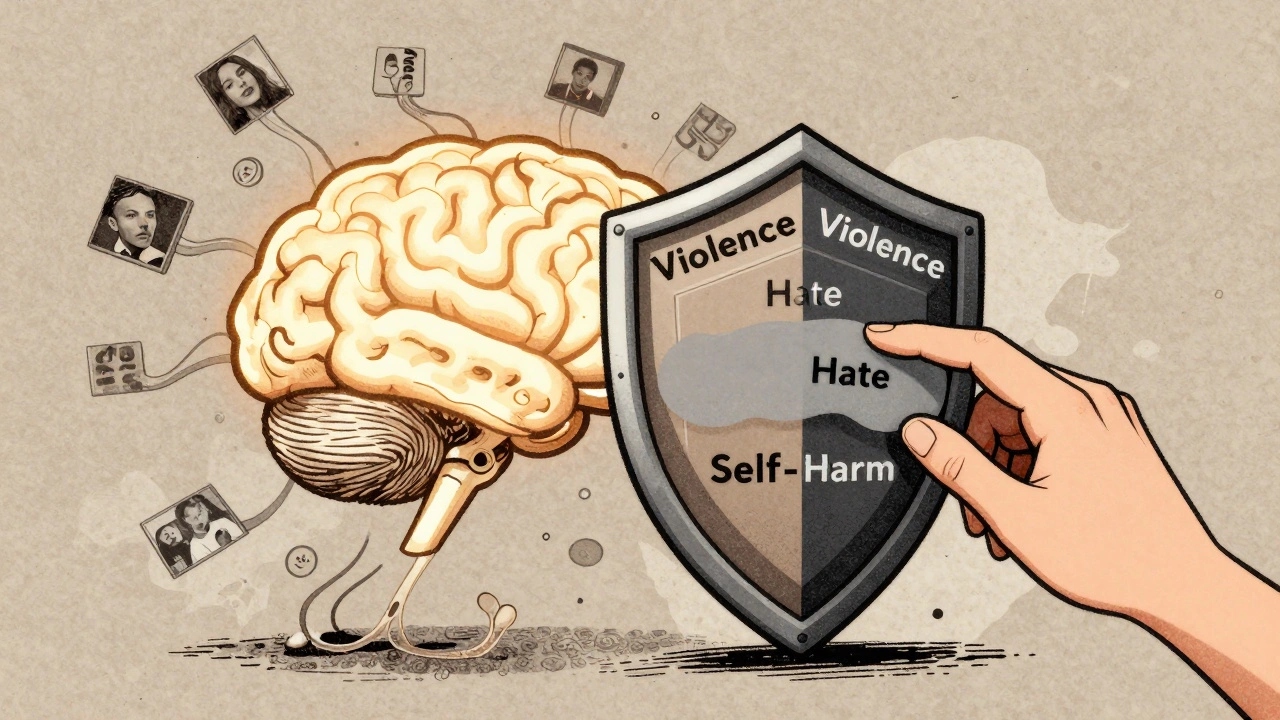

Content Moderation for Generative AI: How Safety Classifiers and Redaction Keep Outputs Safe

Learn how safety classifiers and redaction techniques prevent harmful content in generative AI outputs. Explore real-world tools, accuracy rates, and best practices for responsible AI deployment.
