Review Coverage for AI Scripts: What Works, What Doesn't, and Why It Matters

When you hear "review coverage," think of the systematic evaluation of AI tools and systems to understand their real-world performance, risks, and value. Also known as AI validation, it’s not about marketing claims; it’s about what happens when code meets data, users, and regulations. Most people think AI scripts just work if they generate text or respond to prompts. But that’s like saying a car runs because the engine turns over. The real question is: does it stay on the road? Does it handle rain? Does it break down when you need it most?

True review coverage means checking whether your LLM actually knows what it’s talking about. Benchmarks like TruthfulQA, which tests whether AI models avoid generating false or misleading answers, show that even top models give false answers in about 30% of cases. That’s not a bug; it’s a byproduct of how they’re trained. And if you’re using one in customer service, HR, or healthcare? You’re not just risking bad answers; you’re risking lawsuits, lost trust, and brand damage.
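To make "checking whether your LLM knows what it’s talking about" concrete, here is a minimal scoring sketch, assuming you already have model answers plus a small set of questions with reference truthful and false answers. It is not TruthfulQA’s own scoring method: it uses simple normalized exact matching, and the `EvalItem` format, the questions, and the answers are all hypothetical. Answers that match neither reference set are counted separately so a person can review them.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class EvalItem:
    """One question with reference answers (hypothetical format)."""
    question: str
    correct: set[str]    # answers judged truthful
    incorrect: set[str]  # known false or misleading answers


def normalize(text: str) -> str:
    # Ignore case, surrounding whitespace, and a trailing period.
    return text.strip().rstrip(".").lower()


def score(items: list[EvalItem], answers: list[str]) -> dict[str, float]:
    """Share of answers matching a truthful reference, a false one, or neither."""
    if not items or len(items) != len(answers):
        raise ValueError("need one answer per item, and at least one item")
    truthful = false = 0
    for item, answer in zip(items, answers):
        a = normalize(answer)
        if a in {normalize(c) for c in item.correct}:
            truthful += 1
        elif a in {normalize(c) for c in item.incorrect}:
            false += 1
    n = len(items)
    return {
        "truthful_rate": truthful / n,
        "false_rate": false / n,
        "unmatched_rate": (n - truthful - false) / n,  # route these to a human reviewer
    }


# Hypothetical questions and model answers, for illustration only.
items = [
    EvalItem("Is the Great Wall of China visible from the Moon with the naked eye?",
             {"no"}, {"yes"}),
    EvalItem("Do humans use only 10% of their brains?", {"no"}, {"yes"}),
    EvalItem("Does cracking your knuckles cause arthritis?", {"no"}, {"yes"}),
]
answers = ["No.", "Yes", "It depends on the person"]
result = score(items, answers)
print(result)  # one truthful, one false, one unmatched: each rate is 1/3
```

Exact matching is deliberately strict, so free-form answers tend to land in the unmatched bucket; that bucket is exactly the part of the output that needs a reviewer.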

Then there’s cost. You can’t just run a big model 24/7 and call it a day. LLM autoscaling, the automated adjustment of computing resources based on real-time demand to balance performance and cost, isn’t optional anymore. Companies that skip it can pay 3x more than they need to. And if you’re not tracking token pricing (the cost per unit of text processed by an AI model, which directly drives operational expenses), you’re flying blind. One client thought their AI was cheap until they saw their AWS bill after 30 days of unmonitored usage. It wasn’t a spike. It was a volcano.
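Both cost levers in that paragraph can be sketched in a few lines, under stated assumptions. `desired_replicas` applies a target-tracking rule of the form used by the Kubernetes Horizontal Pod Autoscaler (desired = ceil(current × observed metric / target metric)), clamped to a minimum and maximum; `monthly_token_cost` multiplies average tokens per request by per-1K-token prices. The function names, replica bounds, traffic figures, and prices are hypothetical placeholders, not any provider’s actual rates.

```python
import math


def desired_replicas(current: int, observed: float, target: float,
                     min_replicas: int = 1, max_replicas: int = 10) -> int:
    """Target tracking: scale replicas in proportion to observed/target load.

    observed and target are the same per-replica metric, e.g. in-flight
    requests per replica (an assumption; use whatever signal you scale on).
    """
    if current < 1 or target <= 0:
        raise ValueError("current must be >= 1 and target must be positive")
    raw = math.ceil(current * observed / target)
    return max(min_replicas, min(max_replicas, raw))


def monthly_token_cost(requests_per_day: int, input_tokens: int, output_tokens: int,
                       input_price_per_1k: float, output_price_per_1k: float,
                       days: int = 30) -> float:
    """Estimated spend, given average tokens per request and per-1K-token prices."""
    per_request = ((input_tokens / 1000) * input_price_per_1k
                   + (output_tokens / 1000) * output_price_per_1k)
    return per_request * requests_per_day * days


# Load is 90 requests per replica against a target of 60: scale 4 -> 6 replicas.
print(desired_replicas(current=4, observed=90, target=60))  # 6
# Overnight lull: scale down, but never below min_replicas.
print(desired_replicas(current=4, observed=10, target=60))  # 1

# Placeholder prices, not any provider's real rates.
cost = monthly_token_cost(requests_per_day=10_000, input_tokens=500, output_tokens=250,
                          input_price_per_1k=0.0005, output_price_per_1k=0.0015)
print(f"${cost:,.2f} per month")  # $187.50 per month
```

Running the cost estimate daily against real request logs, rather than waiting for the monthly bill, is what lets you notice a trend before it becomes a volcano.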

And what about security? You’re not just protecting data; you’re protecting your entire supply chain. Model weights, containers, and dependencies are all attack surfaces. LLM supply chain security, the practice of securing AI models and their dependencies from tampering, injection, or unauthorized access, isn’t a buzzword. It’s a checklist: signed models, SBOMs (software bills of materials), and automated scans. Skip it, and you’re inviting a breach you didn’t even know was possible.
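One item on that checklist, making sure model weights haven’t been swapped or altered, can be sketched with SHA-256 digest pinning: record the expected digest of each artifact when you vet it, then refuse to load anything that doesn’t match. This is a simpler stand-in for signing, not a replacement for it: a digest proves a file is unchanged, but not who produced it. The manifest format and file names below are hypothetical.

```python
from __future__ import annotations

import hashlib
import hmac
import tempfile
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash the file in fixed-size chunks (1 MiB by default) so large weight files never sit fully in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_artifacts(manifest: dict[str, str], root: Path) -> list[str]:
    """Check every file in the manifest against its pinned digest.

    manifest maps paths relative to root to expected hex SHA-256 digests
    (a hypothetical format). Returns a list of problems; empty means all good.
    """
    problems = []
    for rel_path, expected in manifest.items():
        path = root / rel_path
        if not path.is_file():
            problems.append(f"{rel_path}: missing")
        elif not hmac.compare_digest(sha256_of(path), expected.lower()):
            problems.append(f"{rel_path}: digest mismatch")
    return problems


with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    (root / "model.bin").write_bytes(b"example weights")
    manifest = {
        "model.bin": hashlib.sha256(b"example weights").hexdigest(),
        "tokenizer.json": "0" * 64,  # pinned, but never written to disk
    }
    before = verify_artifacts(manifest, root)
    (root / "model.bin").write_bytes(b"tampered weights")  # simulate tampering
    after = verify_artifacts(manifest, root)

print(before)  # ['tokenizer.json: missing']
print(after)   # ['model.bin: digest mismatch', 'tokenizer.json: missing']
```

Treating a missing file as a failure, not a skip, matters: an attacker who can delete an artifact could otherwise force a fallback download from an unvetted source.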

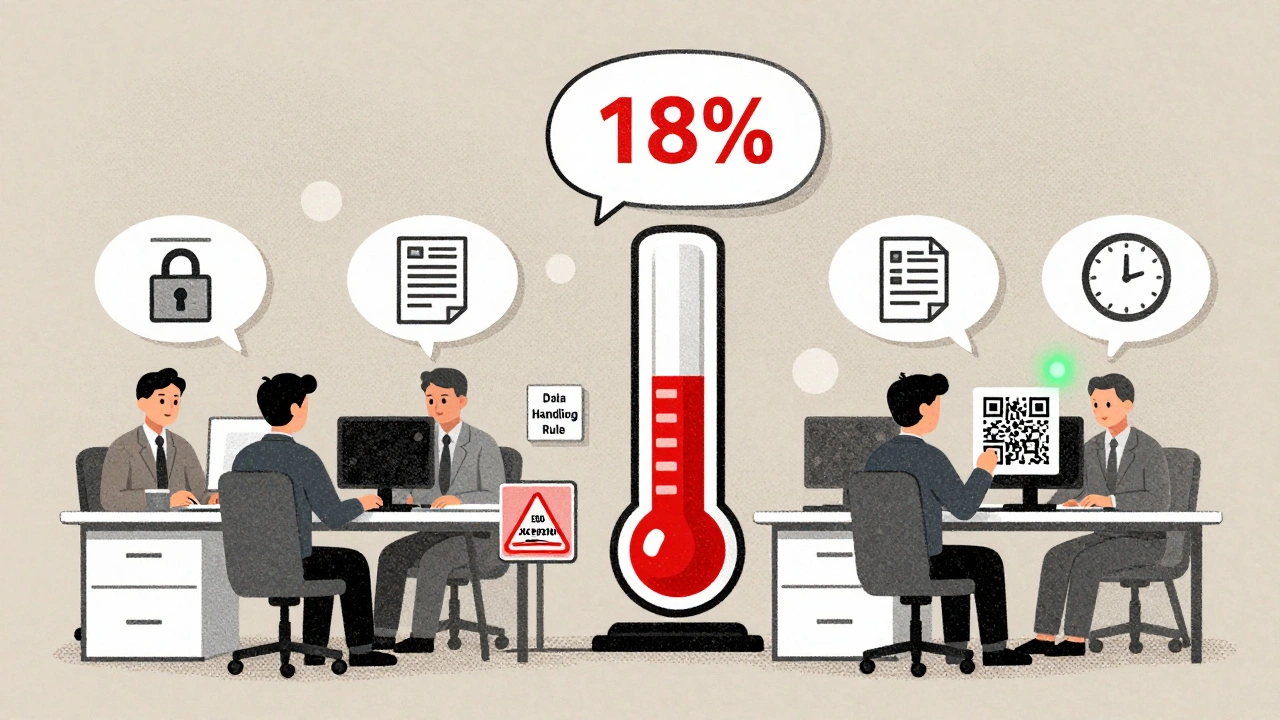

Review coverage isn’t about picking the fanciest AI script. It’s about knowing which ones survive real use. It’s about understanding how enterprise data governance, the framework for managing data used in AI systems to ensure compliance, privacy, and ethical use, affects your model’s output. And it’s about realizing that generative AI ethics, the principles guiding responsible design, deployment, and oversight of AI systems that generate content, isn’t just an HR policy; it’s increasingly a legal requirement in California, Colorado, and more states every year.

What you’ll find below isn’t a list of "best" tools. It’s a collection of deep dives into what actually matters: how multi-head attention affects accuracy, why function calling reduces hallucinations, how retrieval-augmented generation (RAG) beats fine-tuning in real applications, and why vibe coding can save weeks or wreck your codebase if misused. Every post here was chosen because it answers a question someone got burned by. No fluff. No hype. Just what you need to know before you deploy.

KPIs for Governance: How to Measure Policy Adherence, Review Coverage, and MTTR

Learn how to measure governance effectiveness with policy adherence, review coverage, and MTTR: three critical KPIs that turn compliance into real business resilience.

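The three KPIs in the post listed above reduce to simple ratios and an average. The sketch below assumes hypothetical record types and definitions: review coverage as the share of changes that received a review, policy adherence as the share of applicable policy checks passed across all changes, and MTTR as the mean time from an incident being opened to being resolved. Your organization’s definitions may differ, so treat these formulas as a starting point.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Change:
    """One AI-script change or deployment (hypothetical record)."""
    reviewed: bool
    checks_passed: int  # policy checks this change passed
    checks_total: int   # policy checks that applied to it


@dataclass
class Incident:
    opened: datetime
    resolved: datetime


def review_coverage(changes: list[Change]) -> float:
    """Share of changes that received a review."""
    if not changes:
        raise ValueError("no changes to measure")
    return sum(c.reviewed for c in changes) / len(changes)


def policy_adherence(changes: list[Change]) -> float:
    """Share of applicable policy checks that passed, across all changes."""
    total = sum(c.checks_total for c in changes)
    if total == 0:
        raise ValueError("no policy checks recorded")
    return sum(c.checks_passed for c in changes) / total


def mttr(incidents: list[Incident]) -> timedelta:
    """Mean time from an incident being opened to being resolved."""
    if not incidents:
        raise ValueError("no incidents to measure")
    durations = [i.resolved - i.opened for i in incidents]
    return sum(durations, timedelta()) / len(durations)


# Hypothetical sample data, for illustration only.
changes = [Change(True, 5, 5), Change(True, 4, 5), Change(False, 3, 5), Change(True, 5, 5)]
incidents = [
    Incident(datetime(2024, 1, 1, 9), datetime(2024, 1, 1, 11)),   # 2 hours
    Incident(datetime(2024, 1, 2, 10), datetime(2024, 1, 2, 14)),  # 4 hours
]
print(f"review coverage:  {review_coverage(changes):.0%}")   # 75%
print(f"policy adherence: {policy_adherence(changes):.0%}")  # 85%
print(f"MTTR:             {mttr(incidents)}")                # 3:00:00
```

Keeping the raw records rather than only the percentages lets you recompute the KPIs if the definitions change later.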