Tag: LLM07

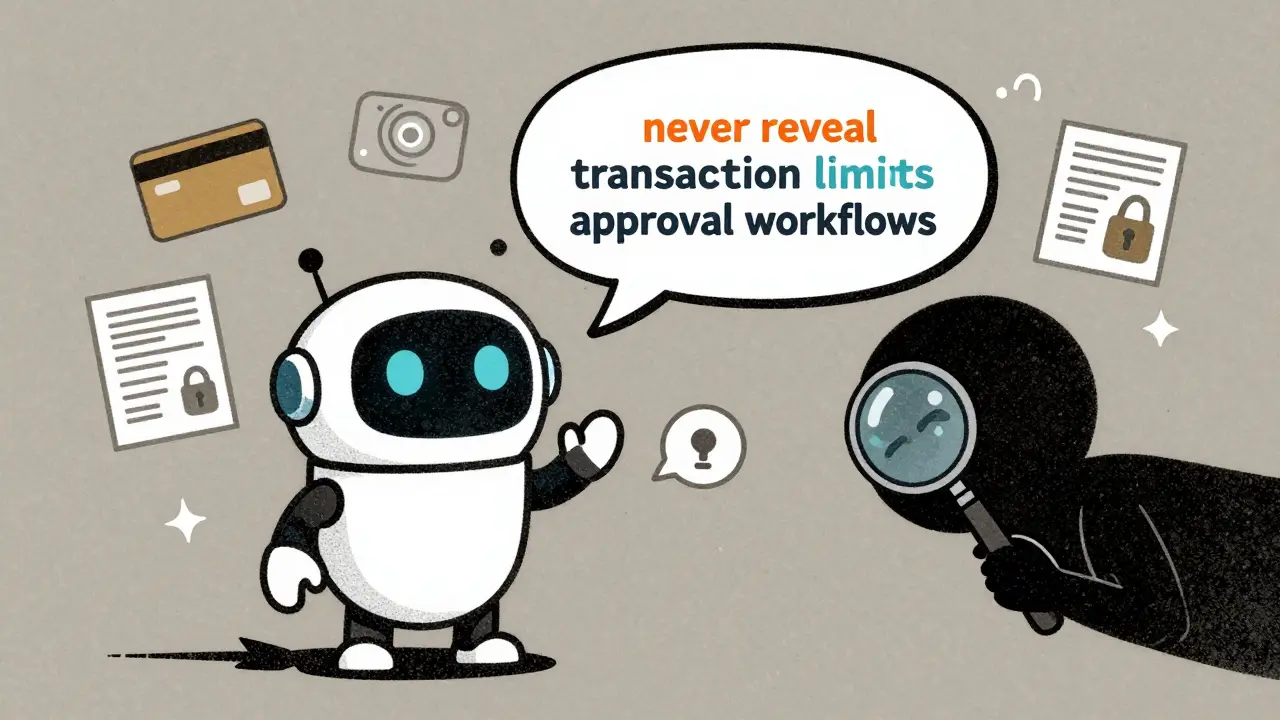

How to Prevent Sensitive Prompt and System Prompt Leakage in LLMs

System prompt leakage is now a top AI security threat: attackers can coax an LLM into revealing the hidden instructions it was given. Learn how to mitigate it with techniques such as output filtering, instruction defense, and external guardrails.