LLM Scaling Policies: How to Manage Cost, Performance, and Compliance as AI Grows

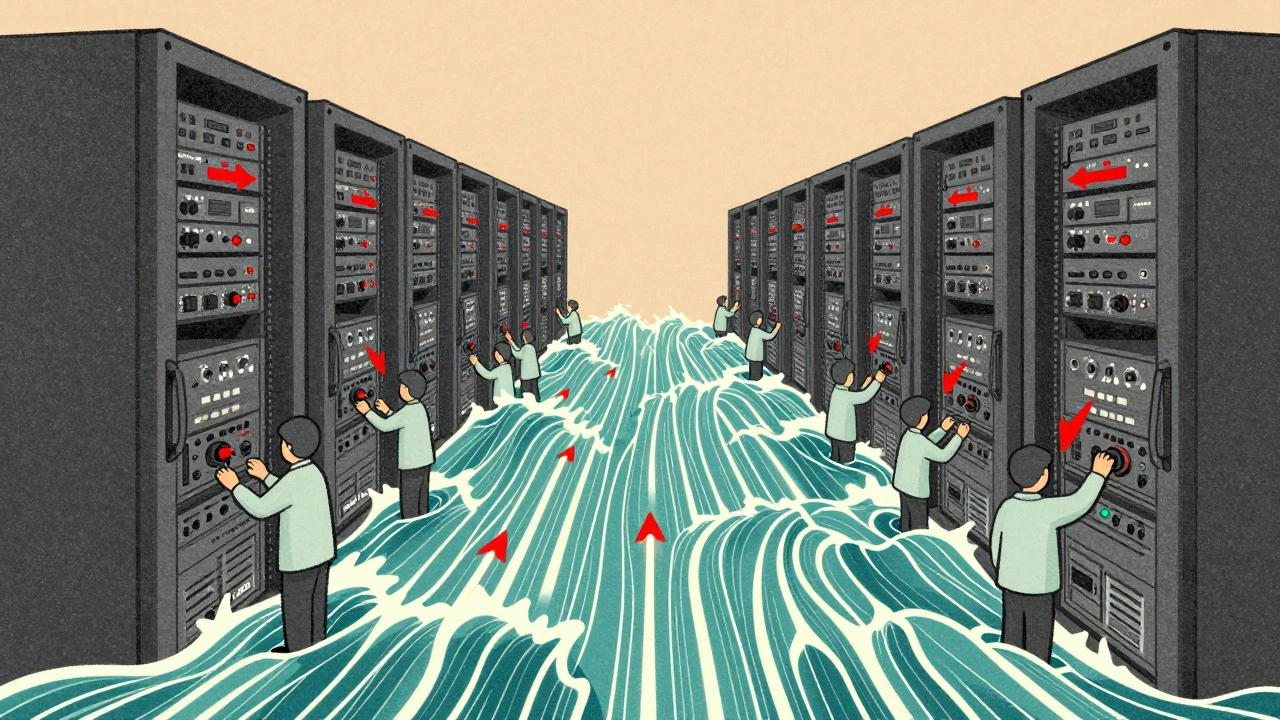

When you deploy a large language model (LLM), a powerful AI system trained on massive datasets to understand and generate human-like text, it can power chatbots, automate reports, and even write code. But that only holds if you have the right LLM scaling policies: the rules and practices that determine how, when, and where LLMs run under load. Without them, your costs spiral, responses slow down, and compliance teams start asking hard questions.

Scaling isn’t just about adding more servers. It’s about making smart trade-offs. Take AI cost optimization, the practice of reducing cloud spending on LLMs without losing quality. Many teams burn cash running models 24/7 when usage spikes only during business hours. That’s where scheduling and spot instances come in. Others default to the most expensive model when a smaller one could do the job, at least until error rates climb. That’s where model switching, the strategy of swapping between different LLMs based on task complexity and budget, makes sense. And if you’re handling customer data or regulated content, you can’t ignore governance KPIs: measurable metrics, such as policy adherence and mean time to resolve issues, that track whether your AI is being used safely and legally. These aren’t optional checkmarks. They’re the difference between launching fast and getting fined.
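To make model switching concrete, here is a minimal sketch of a router that picks the cheapest model able to handle a task within a per-request budget. The model names, prices, and the 1-to-5 complexity scale are all hypothetical placeholders, not real offerings or a recommended scoring scheme.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelOption:
    name: str                  # hypothetical model identifier
    cost_per_1k_tokens: float  # hypothetical price in USD
    max_complexity: int        # highest task complexity (1-5) it handles acceptably


# Hypothetical catalog, ordered from cheapest to most expensive.
CATALOG = [
    ModelOption("small-model", 0.0005, 2),
    ModelOption("medium-model", 0.003, 4),
    ModelOption("large-model", 0.03, 5),
]


def pick_model(complexity: int, est_tokens: int, budget_usd: float) -> ModelOption:
    """Return the cheapest affordable model that can handle the task.

    If no affordable model reaches the requested complexity, fall back to
    the most capable model that still fits the budget.
    """
    affordable = [
        m for m in CATALOG
        if m.cost_per_1k_tokens * est_tokens / 1000 <= budget_usd
    ]
    if not affordable:
        raise ValueError("no model fits the budget")
    for model in affordable:  # cheapest first
        if model.max_complexity >= complexity:
            return model
    return affordable[-1]  # best effort within budget
```

In practice the complexity score would come from something like a classifier or a heuristic on the prompt, and the fallback branch is where error rates are worth watching.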

What you’ll find below isn’t theory. These are real strategies used by teams shipping LLMs in production. You’ll see how companies cut cloud bills by up to 60% using autoscaling, how they track compliance with simple KPIs, how they avoid vendor lock-in by abstracting model providers, and how they keep AI outputs safe with moderation and redaction. Some posts dive into technical details like distributed training and confidential computing. Others focus on the human side: developer sentiment, legal risks, and how non-technical founders use AI without breaking anything. There’s no fluff. Just what works when your LLM goes from a demo to a critical system.

Autoscaling Large Language Model Services: Policies, Signals, and Costs

Learn how to autoscale LLM services effectively using prefill queue size, slots_used, and HBM usage. Reduce costs by up to 60% while keeping latency low with proven policies and real-world benchmarks.
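As a rough illustration of how those three signals might feed a scaling decision, the sketch below applies a proportional rule (scale replicas by the ratio of observed value to target) to each signal and takes the largest result. The target values, replica limits, and the `Metrics` shape are assumptions made for the example, not figures from the linked post.

```python
import math
from dataclasses import dataclass


@dataclass
class Metrics:
    prefill_queue_size: float  # average requests waiting for prefill, per replica
    slots_used: int            # decode slots currently occupied, per replica
    slots_total: int           # decode slots available per replica (must be > 0)
    hbm_used_fraction: float   # fraction of accelerator HBM in use, 0.0-1.0


def desired_replicas(
    current: int,
    m: Metrics,
    *,
    target_queue: float = 4.0,      # assumed target queue depth
    target_slot_util: float = 0.8,  # assumed target slot utilization
    target_hbm: float = 0.85,       # assumed target HBM usage
    min_replicas: int = 1,
    max_replicas: int = 16,
) -> int:
    """Scale on whichever signal is furthest above its target."""
    ratios = [
        m.prefill_queue_size / target_queue,
        (m.slots_used / m.slots_total) / target_slot_util,
        m.hbm_used_fraction / target_hbm,
    ]
    wanted = math.ceil(current * max(ratios))
    # Clamp to the configured replica bounds.
    return max(min_replicas, min(max_replicas, wanted))
```

Taking the maximum across signals means the service scales out as soon as any one resource is under pressure, and scales in only when all three are below target.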
