LLM Inference Costs: How Much Does Running AI Models Really Cost?

When you run a large language model (LLM), a type of AI system that generates text by predicting the next token based on patterns learned from vast amounts of training data, every answer it gives costs money. LLMs are a form of generative AI that powers chatbots, content tools, and automation. Paying for them isn’t like paying for server hosting. It’s pay-per-token: every token you send and every token you get back is billed, and the total adds up fast. A single user asking a few questions can rack up charges that surprise even experienced teams.

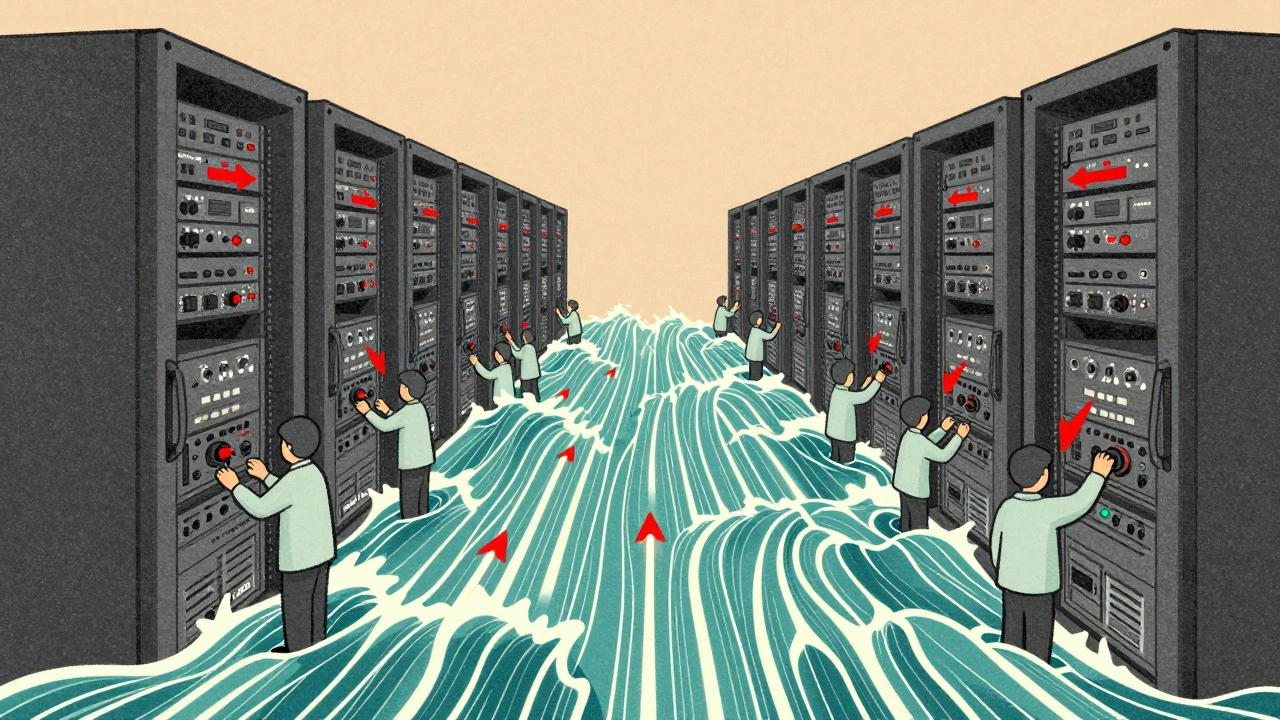

What drives LLM inference costs, the total expense of running a language model in production to answer user requests? Three things. The first is token pricing: how much providers charge per thousand input or output tokens. The second is model size: larger models like GPT-4 Turbo use more compute and cost more per request. The third is user behavior. If people ask long, complex questions or keep a conversation going for many turns, the bill grows, because each turn typically resends the earlier conversation as input. Even one change, such as switching from GPT-3.5 to GPT-4, can multiply your per-token price several times over.
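Here is a minimal sketch of the token arithmetic. It assumes per-1,000-token pricing with separate input and output rates. The model names and prices are hypothetical placeholders, not any provider's list prices. The `conversation_cost` helper assumes a stateless chat API that resends the full history as input on every turn.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelPrice:
    """Per-1,000-token prices in USD (hypothetical values in the example)."""
    name: str
    input_per_1k: float
    output_per_1k: float


def request_cost(price: ModelPrice, input_tokens: int, output_tokens: int) -> float:
    """Cost of one request: input and output tokens are billed at separate rates."""
    return (input_tokens / 1000) * price.input_per_1k + (output_tokens / 1000) * price.output_per_1k


def conversation_cost(price: ModelPrice, turns: int, user_tokens: int, reply_tokens: int) -> float:
    """Total cost of a chat in which every turn resends the whole history as input."""
    total = 0.0
    history = 0
    for _ in range(turns):
        history += user_tokens   # the new user message joins the history
        total += request_cost(price, history, reply_tokens)
        history += reply_tokens  # the reply is resent as input on the next turn
    return total


# Hypothetical price points for a small and a large model.
small = ModelPrice("small-model", input_per_1k=0.0005, output_per_1k=0.0015)
large = ModelPrice("large-model", input_per_1k=0.01, output_per_1k=0.03)

for model in (small, large):
    one_turn = conversation_cost(model, turns=1, user_tokens=200, reply_tokens=300)
    twenty_turns = conversation_cost(model, turns=20, user_tokens=200, reply_tokens=300)
    print(f"{model.name}: 1 turn ${one_turn:.4f}, 20 turns ${twenty_turns:.4f}")
```

Input grows with every turn, so under this assumption the input portion of the bill grows roughly quadratically with conversation length, not linearly.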

And it’s not just the model. Where you run it matters. Cloud providers charge more for guaranteed capacity and speed. You can cut costs in three ways: run self-hosted models on spot instances, schedule non-urgent requests for off-peak hours, or switch to smaller models when you don’t need top-tier accuracy. Some teams save 60% just by optimizing when and how they call the API. Others use RAG (retrieval-augmented generation), which retrieves relevant passages from your own data and passes them to a smaller model. That reduces the need for expensive calls to a large model.
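The savings from routing easy requests to a smaller model follow from simple arithmetic. Suppose a share s of requests goes to a model that costs a fraction r as much per request as the large one. The bill then falls by s × (1 − r). The sketch below assumes you already know the average per-request cost of each model. The function names and example figures are illustrative.

```python
def blended_cost(share_to_small: float, small_cost: float, large_cost: float) -> float:
    """Average cost per request when a share of traffic is routed to a cheaper model."""
    if not 0.0 <= share_to_small <= 1.0:
        raise ValueError("share_to_small must be between 0 and 1")
    return share_to_small * small_cost + (1.0 - share_to_small) * large_cost


def savings_fraction(share_to_small: float, small_cost: float, large_cost: float) -> float:
    """Fractional saving compared with sending every request to the large model."""
    return 1.0 - blended_cost(share_to_small, small_cost, large_cost) / large_cost


# Hypothetical: 65% of requests are easy enough for a model that costs 1/20 as much.
print(f"{savings_fraction(0.65, small_cost=0.002, large_cost=0.04):.1%}")
```

The formula also shows a ceiling. Even if the cheap model were free, routing a share s of traffic can save at most s of the bill.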

You can’t ignore cloud AI costs: the total expense of running AI workloads on platforms like AWS, Azure, or Google Cloud. The API fee is only part of it. You also pay for storing your vector embeddings, the compute for preprocessing, the monitoring tools, and the bandwidth. A single poorly designed chatbot can turn into a $10,000 monthly expense if no one is watching its usage patterns.
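A rough monthly budget makes the non-API line items visible next to the API fee. This sketch assumes you can estimate daily request volume and average cost per request. The category names mirror the cost list in the paragraph on cloud AI costs. Every number in the example is hypothetical.

```python
from dataclasses import dataclass, fields


def monthly_api_fees(requests_per_day: int, avg_cost_per_request: float, days: int = 30) -> float:
    """Projected API spend for a month at a steady request volume."""
    return requests_per_day * avg_cost_per_request * days


@dataclass
class MonthlyCosts:
    """Monthly line items in USD for one LLM feature."""
    api_fees: float
    vector_storage: float
    preprocessing_compute: float
    monitoring: float
    bandwidth: float

    def total(self) -> float:
        return sum(getattr(self, f.name) for f in fields(self))

    def shares(self) -> dict:
        """Each line item's fraction of the total bill."""
        total = self.total()
        return {f.name: getattr(self, f.name) / total for f in fields(self)}


# Hypothetical chatbot: 20,000 requests/day at $0.015 each, plus supporting services.
bill = MonthlyCosts(
    api_fees=monthly_api_fees(20_000, 0.015),
    vector_storage=500.0,
    preprocessing_compute=700.0,
    monitoring=300.0,
    bandwidth=200.0,
)
print(f"total ${bill.total():,.0f}")
for item, share in bill.shares().items():
    print(f"  {item}: {share:.0%}")
```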

But here’s the good news: you don’t need to be an AI expert to control this. Real teams are tracking usage per user, setting hard spending limits, and switching models dynamically based on question complexity. They’re using tools that automatically detect when a simple answer will do. They’re also testing cheaper open-source models that perform almost as well on specific tasks. The goal isn’t to avoid LLMs; it’s to use them smartly.
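A per-user spending cap and a complexity-based router can be sketched in a few lines. Everything here is hypothetical. `UsageLimiter`, `choose_model`, and `handle_request` are illustrative names. The word-count and question-mark check is a deliberately naive stand-in for whatever complexity detection a real tool uses. A production version would also persist spend and reset it each billing period.

```python
from collections import defaultdict
from typing import Dict, Optional


class UsageLimiter:
    """Tracks spend per user and enforces a hard cap (in-memory, single process)."""

    def __init__(self, cap_usd: float):
        self.cap = cap_usd
        self.spent = defaultdict(float)  # user_id -> USD spent so far

    def can_spend(self, user_id: str, cost: float) -> bool:
        return self.spent[user_id] + cost <= self.cap

    def record(self, user_id: str, cost: float) -> None:
        self.spent[user_id] += cost


def choose_model(question: str, max_simple_words: int = 50) -> str:
    """Naive heuristic: long or multi-part questions go to the large model."""
    is_complex = len(question.split()) > max_simple_words or question.count("?") > 1
    return "large-model" if is_complex else "small-model"


def handle_request(limiter: UsageLimiter, user_id: str, question: str,
                   cost_by_model: Dict[str, float]) -> Optional[str]:
    """Pick a model, then refuse the call if it would push the user past the cap."""
    model = choose_model(question)
    cost = cost_by_model[model]  # estimated cost; a real system would record billed tokens
    if not limiter.can_spend(user_id, cost):
        return None  # hard limit hit: refuse (or fall back) instead of billing
    limiter.record(user_id, cost)
    return model


limiter = UsageLimiter(cap_usd=0.05)
costs = {"small-model": 0.01, "large-model": 0.10}  # hypothetical per-request costs
print(handle_request(limiter, "alice", "What is RAG?", costs))                         # small-model
print(handle_request(limiter, "alice", "What is RAG? How does it cut costs?", costs))  # None
```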

Below, you’ll find real breakdowns of how companies cut their LLM inference costs, what metrics actually matter on your bill, which models give the best value, and how to avoid the traps that turn a promising AI feature into a budget killer.

Autoscaling Large Language Model Services: Policies, Signals, and Costs

Learn how to autoscale LLM services effectively using prefill queue size, slots_used, and HBM (high-bandwidth memory) usage. Reduce costs by up to 60% while keeping latency low with proven policies and real-world benchmarks.
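The teaser names three scaling signals. The following is a rough illustration only, not the linked article's policy. A scaler could add a replica when any signal shows pressure, and remove one only when the prefill queue is empty and decode slots are mostly idle. The `ReplicaMetrics` fields, the thresholds, and the one-step-at-a-time behavior are all assumptions.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ReplicaMetrics:
    prefill_queue_size: int    # requests waiting for their prompt to be processed
    slots_used: int            # decode slots currently generating tokens
    slots_total: int           # decode slots the replica can run concurrently
    hbm_used_fraction: float   # accelerator high-bandwidth memory in use, 0.0 to 1.0


def desired_replicas(current: int, metrics: List[ReplicaMetrics],
                     queue_target: float = 4.0, slot_high: float = 0.85,
                     slot_low: float = 0.30, hbm_high: float = 0.90,
                     min_replicas: int = 1, max_replicas: int = 16) -> int:
    """Return the next replica count, moving at most one step per decision."""
    n = max(len(metrics), 1)
    avg_queue = sum(m.prefill_queue_size for m in metrics) / n
    total_slots = sum(m.slots_total for m in metrics)
    slot_util = sum(m.slots_used for m in metrics) / total_slots if total_slots else 0.0
    peak_hbm = max((m.hbm_used_fraction for m in metrics), default=0.0)

    if avg_queue > queue_target or slot_util > slot_high or peak_hbm > hbm_high:
        target = current + 1   # any pressure signal: scale out
    elif avg_queue == 0 and slot_util < slot_low:
        target = current - 1   # drained queue and spare slots: scale in
    else:
        target = current       # inside the comfort band: hold
    return max(min_replicas, min(max_replicas, target))
```

Moving one replica at a time, and scaling in only when the queue is fully drained, is a conservative choice. It trades some idle cost for fewer latency spikes. Real deployments often also add a cooldown between decisions.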
