Global AI: Cross-Border AI Systems, Regulations, and Deployment Challenges

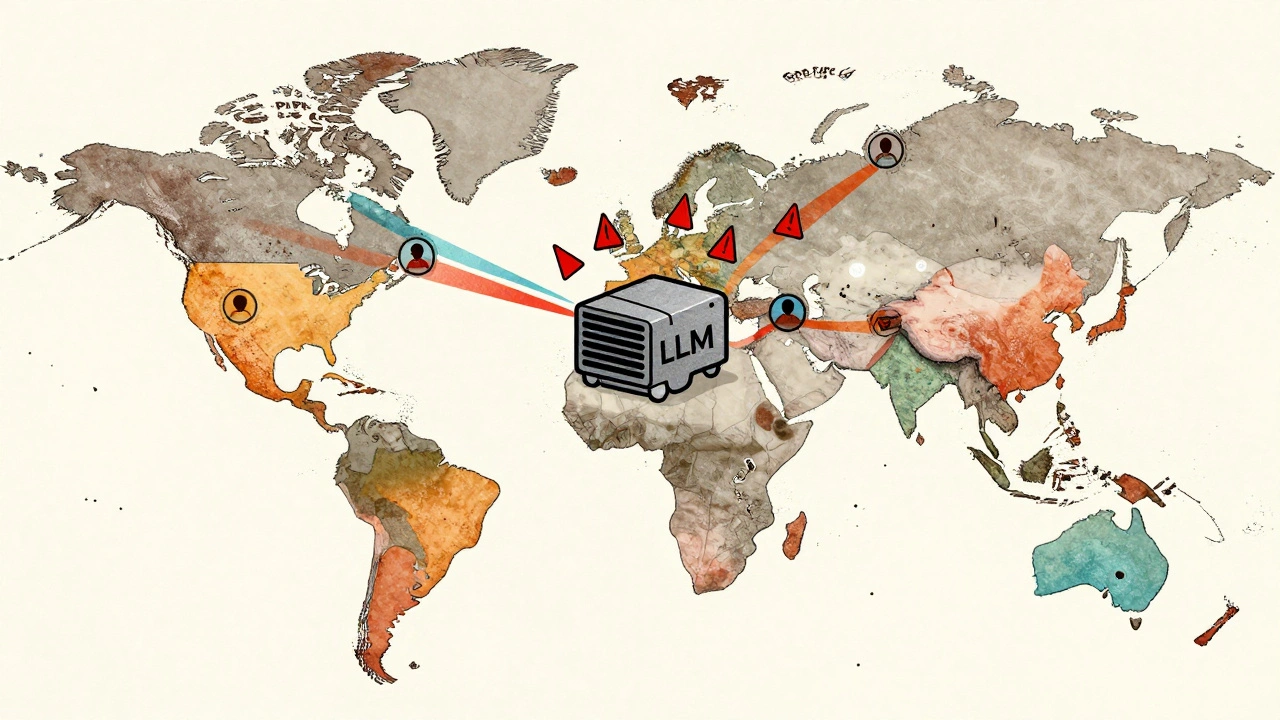

When we talk about global AI, we mean artificial intelligence systems designed to operate across international borders, with all the legal, technical, and cultural considerations that entails. Also known as international AI, it isn't just about running code in multiple countries. It's about following different laws, protecting data in varied jurisdictions, and avoiding fines that can shut down entire product lines. Most companies think AI is a tech problem. It's not. It's a legal, logistical, and ethical minefield.

Take AI export controls: government rules that restrict which AI models, tools, or weights can be shared across national borders. In 2025, the U.S., EU, and UK all draw different lines for what counts as a "high-risk" model. Send a model trained on European medical data to a team in Japan without proper documentation? You could be violating export or data-transfer rules, even if you didn't mean to. Then there are AI regulations: laws that force companies to disclose training data, get user consent, or block certain outputs. California requires transparency about AI-generated content. Illinois bans deepfakes in political ads. Colorado regulates AI in insurance underwriting. These aren't suggestions. They're enforceable rules with real penalties, and under the EU's GDPR, fines for data-protection violations can reach 4% of a company's worldwide annual turnover.

And it's not just governments. LLM deployment (putting large language models into real-world systems like customer service bots or internal knowledge tools) in a global setting means you're also managing data residency, cloud regions, and encryption standards. You can't just spin up a model on AWS in Virginia and call it done if your users are in Germany, Brazil, or Singapore. Each region has its own rules for where data can be stored, who can access it, and how long it can be kept. Even something as simple as logging user prompts might break privacy laws if you're not careful.
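To make the region question concrete, here is a minimal Python sketch of fail-closed residency routing: each request is sent only to a region the user's country permits, and the matching log-retention limit travels with the decision. The country-to-region table, the region names, and the retention periods are hypothetical placeholders, not legal guidance and not any cloud provider's real region list.

```python
from dataclasses import dataclass

# Hypothetical policy: user country code -> regions where that user's
# prompts may be processed and stored, in order of preference.
RESIDENCY_POLICY: dict[str, tuple[str, ...]] = {
    "DE": ("eu-central", "eu-west"),
    "BR": ("sa-east",),
    "SG": ("ap-southeast",),
    "US": ("us-east", "us-west"),
}

# Hypothetical maximum number of days prompt logs may be kept, per country.
RETENTION_DAYS: dict[str, int] = {"DE": 30, "BR": 30, "SG": 90, "US": 90}


@dataclass(frozen=True)
class RoutingDecision:
    region: str
    retention_days: int


class ResidencyError(Exception):
    """Raised when no deployed region satisfies the user's residency rules."""


def route_request(user_country: str, deployed_regions: set[str]) -> RoutingDecision:
    """Return the first allowed region where the model is actually deployed."""
    allowed = RESIDENCY_POLICY.get(user_country)
    if allowed is None:
        # Fail closed: an unknown jurisdiction is never routed to a default region.
        raise ResidencyError(f"no residency policy for {user_country!r}")
    for region in allowed:
        if region in deployed_regions:
            return RoutingDecision(region, RETENTION_DAYS[user_country])
    raise ResidencyError(f"model not deployed in any region allowed for {user_country!r}")


# A German user, with the model deployed in Virginia-like and EU regions:
decision = route_request("DE", {"us-east", "eu-west"})
print(decision.region, decision.retention_days)  # eu-west 30
```

Failing closed is the point of the design: an unlisted country, or a model that isn't deployed in any permitted region, produces an error instead of a silent fallback to whatever region happens to be the default.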

That's why AI compliance, the ongoing practice of ensuring AI systems meet legal, ethical, and operational standards in every market, isn't a one-time audit. It's a daily workflow. It means knowing which models are allowed where, how to handle data transfers legally, and how to respond when a country suddenly bans a type of AI. Companies that treat this as an afterthought end up with blocked APIs, frozen accounts, or lawsuits. Those that build it in from day one scale without surprises.
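"Knowing which models are allowed where" works best when it lives in data rather than in people's heads. Below is a minimal Python sketch, assuming a hypothetical `ApprovalRegistry` that records which markets each model has been cleared for. The model names and market codes are invented for illustration, and the approvals themselves would still have to come from legal review.

```python
from dataclasses import dataclass, field


@dataclass
class ApprovalRegistry:
    """Hypothetical record of which models are approved in which markets."""

    approvals: dict[str, set[str]] = field(default_factory=dict)

    def approve(self, model_id: str, market: str) -> None:
        """Record that model_id has been cleared for deployment in market."""
        self.approvals.setdefault(model_id, set()).add(market)

    def blocked_markets(self, model_id: str, targets: set[str]) -> set[str]:
        """Return the markets in targets where model_id has no recorded approval."""
        return targets - self.approvals.get(model_id, set())

    def revoke_market(self, market: str) -> list[str]:
        """Withdraw every approval for market (e.g. after a new ban) and
        return the affected model IDs, sorted, so deployments can be rolled back."""
        affected = []
        for model_id, markets in self.approvals.items():
            if market in markets:
                markets.discard(market)  # mutates the set, not the dict
                affected.append(model_id)
        return sorted(affected)


registry = ApprovalRegistry()
registry.approve("support-bot-v3", "US")
registry.approve("support-bot-v3", "DE")
registry.approve("underwriting-scorer-v1", "US")
print(registry.blocked_markets("support-bot-v3", {"US", "DE", "BR"}))  # {'BR'}
print(registry.revoke_market("US"))  # ['support-bot-v3', 'underwriting-scorer-v1']
```

Returning the affected models from `revoke_market` is a deliberate choice: when a country bans a type of AI, the first operational question is which deployments to pull, and the registry can answer it immediately.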

This collection doesn't just list tools or code. It shows you the real-world trade-offs: how to design AI that works across borders without breaking laws, how to measure compliance with actual KPIs, how to avoid costly mistakes in multi-tenant SaaS apps, and how to use encryption and confidential computing to protect data even while it's being processed. You'll find deep dives on export controls, state-level U.S. laws, truthfulness benchmarks, and how blockchain and cryptography are being used to prove AI decisions are trustworthy. These aren't theory pieces. They're field reports from teams who were fined, blocked, or saved depending on whether they got it right or wrong.

Data Residency Considerations for Global LLM Deployments

Data residency rules for global LLM deployments vary by country and can lead to heavy fines if ignored. Learn how to legally deploy AI models across borders without violating privacy laws like GDPR, PIPL, or LGPD.