Generative AI Moderation: How to Keep AI Content Safe and Compliant

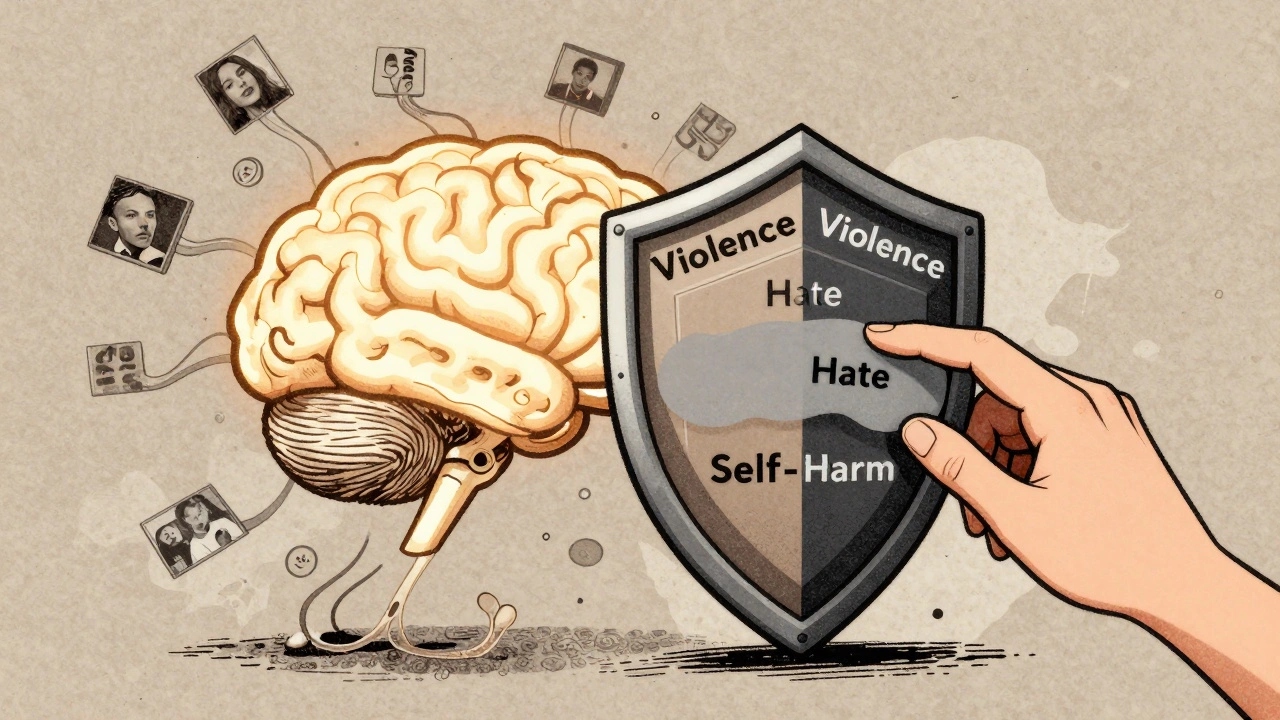

Generative AI moderation is the process of filtering, blocking, or flagging harmful, biased, or inaccurate outputs from AI systems. Also known as AI content filtering, it's what stops your chatbot from spreading misinformation, your customer service bot from sounding offensive, or your marketing tool from generating illegal content. Without it, even the most advanced models can cause real harm: legal trouble, brand damage, or worse.

Generative AI moderation isn't just about keywords. It also means understanding AI hallucinations (cases where a model makes up facts that sound plausible but are completely false) and how they show up in your app. A model might claim a company's CEO was arrested when they never were, or generate hate speech disguised as humor. LLM filtering tools (systems that scan AI outputs in real time using rules, classifiers, or external APIs) help catch these problems before they reach users. Many companies use OpenAI's moderation endpoint, Google's Content Safety API, or custom-trained classifiers tuned to industries such as healthcare, finance, or education, where mistakes cost more than just embarrassment.
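One way to tune moderation to a specific industry is to apply your own per-category thresholds to the scores a moderation service returns. The sketch below assumes the response has already been parsed into a plain dict shaped like the `category_scores` field in OpenAI's moderation results. The category names used, the threshold values, and the `flagged_categories` helper are hypothetical and would need calibrating against your own data.

```python
from typing import Dict, List

# Hypothetical per-category thresholds. A lower value flags the category
# at a lower score, i.e. stricter moderation. Calibrate on your own data.
THRESHOLDS: Dict[str, float] = {
    "hate": 0.30,
    "harassment": 0.40,
    "self-harm": 0.10,   # e.g. much stricter for a healthcare assistant
    "violence": 0.50,
}
DEFAULT_THRESHOLD = 0.50  # applied to any category not listed above


def flagged_categories(category_scores: Dict[str, float],
                       thresholds: Dict[str, float] = THRESHOLDS,
                       default: float = DEFAULT_THRESHOLD) -> List[str]:
    """Return, sorted, the categories whose score meets or exceeds its threshold."""
    return sorted(
        name for name, score in category_scores.items()
        if score >= thresholds.get(name, default)
    )


# Made-up scores in the shape a moderation API might return.
scores = {"hate": 0.05, "harassment": 0.45, "self-harm": 0.12, "sexual": 0.01}
print(flagged_categories(scores))  # ['harassment', 'self-harm']
```

Keeping the thresholds in your own code, rather than relying only on a service's single `flagged` boolean, lets you make one category stricter for a sensitive domain without changing how the others behave.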

And it's not just about blocking bad content. Effective moderation also handles content compliance: adhering to laws such as California's AI transparency rules or the EU AI Act, which can require disclosure, consent, or risk assessments. If your app generates images of people, you need to know whether it's creating deepfakes. If it writes legal disclaimers, you need to ensure they're accurate. That's why moderation now includes metadata tagging, audit trails, and human review workflows, not just automated filters.
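Metadata tagging and audit trails can start as simply as writing one structured record per moderated output. The sketch below is an assumption about what such a record might contain, not a format any regulation prescribes. The `ModerationRecord` fields, the `"allow"`/`"block"`/`"review"` decision values, and the helper functions are all hypothetical. It stores a SHA-256 hash instead of the raw text to limit how much generated content the log retains.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import List


@dataclass
class ModerationRecord:
    """One audit-trail entry for a single AI output (field names are illustrative)."""
    model: str
    decision: str                      # hypothetical values: "allow", "block", "review"
    reasons: List[str] = field(default_factory=list)
    content_sha256: str = ""           # hash of the output instead of the raw text
    ai_generated: bool = True          # disclosure tag attached to the output
    timestamp: str = ""                # UTC time in ISO 8601 format


def make_record(output_text: str, model: str, decision: str,
                reasons: List[str]) -> ModerationRecord:
    """Build a record, hashing the output and stamping the current UTC time."""
    digest = hashlib.sha256(output_text.encode("utf-8")).hexdigest()
    now = datetime.now(timezone.utc).isoformat()
    return ModerationRecord(model=model, decision=decision, reasons=reasons,
                            content_sha256=digest, timestamp=now)


def needs_human_review(record: ModerationRecord) -> bool:
    """Send anything the automated layers could not settle to a person."""
    return record.decision == "review"


record = make_record("Sample model output", model="example-model",
                     decision="review", reasons=["possible deepfake request"])
# In production you would append this JSON line to an append-only audit log.
print(json.dumps(asdict(record)))
print(needs_human_review(record))  # True
```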

What you'll find here are real, tested approaches from developers who've been burned by AI going off the rails. You'll see how to set up layered moderation: start with simple keyword blocks, move to neural classifiers, and add human oversight where it matters. You'll learn which tools work for SaaS apps, which ones scale for enterprise, and how to avoid the trap of false positives that frustrate users. This isn't theory. These are the systems running in production right now, keeping businesses out of court and users safe.
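The layered approach described above can be sketched as a single function that tries each layer in turn. This is a minimal illustration under stated assumptions, not a production design. The blocklist entries, the two score thresholds, and `toy_classifier` are placeholders; in practice the classifier would be a trained model or an external moderation API. The middle score band routes text to human review instead of blocking it outright. That is one way to trade reviewer time for fewer false positives.

```python
import re
from typing import Callable, List, Tuple

# Layer 1: hypothetical blocklist. Word boundaries (\b) keep longer words
# that merely contain a blocked term from being flagged.
BLOCKLIST: List[str] = ["badword", "slurexample"]
BLOCK_RE = re.compile(r"\b(" + "|".join(map(re.escape, BLOCKLIST)) + r")\b",
                      re.IGNORECASE)

# Layer 2 thresholds (hypothetical). Scores between them go to a human.
BLOCK_AT = 0.90
REVIEW_AT = 0.60


def moderate(text: str, classifier: Callable[[str], float]) -> Tuple[str, str]:
    """Return (decision, reason), where decision is "block", "review", or "allow"."""
    match = BLOCK_RE.search(text)
    if match:
        return "block", f"keyword: {match.group(0).lower()}"
    score = classifier(text)  # assumed: probability the text is harmful, 0.0 to 1.0
    if score >= BLOCK_AT:
        return "block", f"classifier score {score:.2f}"
    if score >= REVIEW_AT:
        # Layer 3: uncertain cases go to human oversight.
        return "review", f"classifier score {score:.2f}"
    return "allow", "passed all layers"


def toy_classifier(text: str) -> float:
    """Stand-in for a real classifier; swap in a model or API call."""
    return 0.7 if "arrested" in text.lower() else 0.1


print(moderate("This has BadWord in it", toy_classifier))        # ('block', 'keyword: badword')
print(moderate("The CEO was arrested yesterday", toy_classifier))  # ('review', 'classifier score 0.70')
print(moderate("Thanks for your order!", toy_classifier))          # ('allow', 'passed all layers')
```

The cheap regex check runs first, so the classifier is only called on text that survives the keyword layer.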

Content Moderation for Generative AI: How Safety Classifiers and Redaction Keep Outputs Safe

Learn how safety classifiers and redaction techniques prevent harmful content in generative AI outputs. Explore real-world tools, accuracy rates, and best practices for responsible AI deployment.
