Domain-Aware LLM: How AI Understands Your Specific Data and Context

A domain-aware LLM is a large language model trained on, or connected to, your private data so it can answer questions accurately within a specific field. Also known as context-aware AI, it doesn't rely on general knowledge alone: it pulls from your documents, databases, or internal systems to give answers grounded in your business. This isn't just fancy wording. It’s the difference between an AI that guesses and one that knows.

A domain-aware LLM works by combining two things: the raw power of a large language model like GPT or Claude, and a way to connect it to your own information. That connection usually comes from retrieval-augmented generation (RAG), a technique where the AI searches your data before answering, pulling in facts from your documents instead of making them up. Without this, even the strongest models hallucinate, especially when you ask about your product specs, internal policies, or customer history. Vector databases, systems that store and retrieve data by meaning rather than by keyword, make this possible. They store your PDFs, emails, and spreadsheets as embeddings (numeric vectors that capture meaning), turning them into searchable knowledge that the LLM can use in real time.
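The retrieve-then-answer loop described above can be sketched in a few lines. This is a minimal illustration under loud assumptions, not a production design: a bag-of-words cosine similarity is swapped in for a real embedding model, a plain Python list stands in for a vector database, and `InMemoryVectorStore`, `vectorize`, and `build_prompt` are hypothetical names. The final prompt would be sent to a model such as GPT or Claude; that call is not shown.

```python
import math
import re
from collections import Counter


def vectorize(text: str) -> Counter:
    # Bag-of-words term counts stand in for a dense embedding vector here.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two sparse term-count vectors.
    dot = sum(count * b[term] for term, count in a.items() if term in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class InMemoryVectorStore:
    """Hypothetical toy vector store: keeps (text, vector) pairs and ranks them by similarity."""

    def __init__(self) -> None:
        self.docs: list[tuple[str, Counter]] = []

    def add(self, text: str) -> None:
        self.docs.append((text, vectorize(text)))

    def search(self, query: str, k: int = 2) -> list[str]:
        q = vectorize(query)
        ranked = sorted(self.docs, key=lambda doc: cosine(q, doc[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


def build_prompt(question: str, store: InMemoryVectorStore, k: int = 2) -> str:
    # Retrieval happens BEFORE generation: the top-k passages are placed in the prompt.
    context = "\n".join(f"- {passage}" for passage in store.search(question, k))
    return (
        "Answer using only the context below. If the answer is not there, say so.\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


store = InMemoryVectorStore()
for doc in [
    "Returns are accepted within 30 days of delivery with a receipt.",
    "Our support line is open Monday to Friday, 9am to 5pm.",
    "Model X200 has a 2-year warranty.",
]:
    store.add(doc)

# This prompt would then be sent to the LLM (the model call itself is not shown).
print(build_prompt("How many days do I have for returns?", store, k=1))
```

The instruction to answer only from the supplied context is what discourages the model from inventing a return policy; in a real system, `vectorize` would call an embedding model and `search` would query the vector database.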

Why does this matter? Because generic AI falls short in real-world settings. A customer support bot that doesn’t know your return policy will frustrate users. A legal assistant that can’t cite your contract templates is a liability. A medical AI that ignores your hospital’s protocols is dangerous. A domain-aware LLM closes these gaps. It’s not about making the model bigger; it’s about making it smarter with your data. And that’s exactly what the posts below cover: how to build these systems in PHP, how to keep them secure, how to cut costs without losing accuracy, and how to avoid the pitfalls most teams hit when they move from theory to real deployment.

You’ll find real examples here: how companies use RAG to cut support tickets by 70%, how to structure your data so the LLM actually understands it, and how to test whether your AI is truly domain-aware or just pretending. No fluff. No hype. Just what works when you’re building something that has to perform under real pressure.

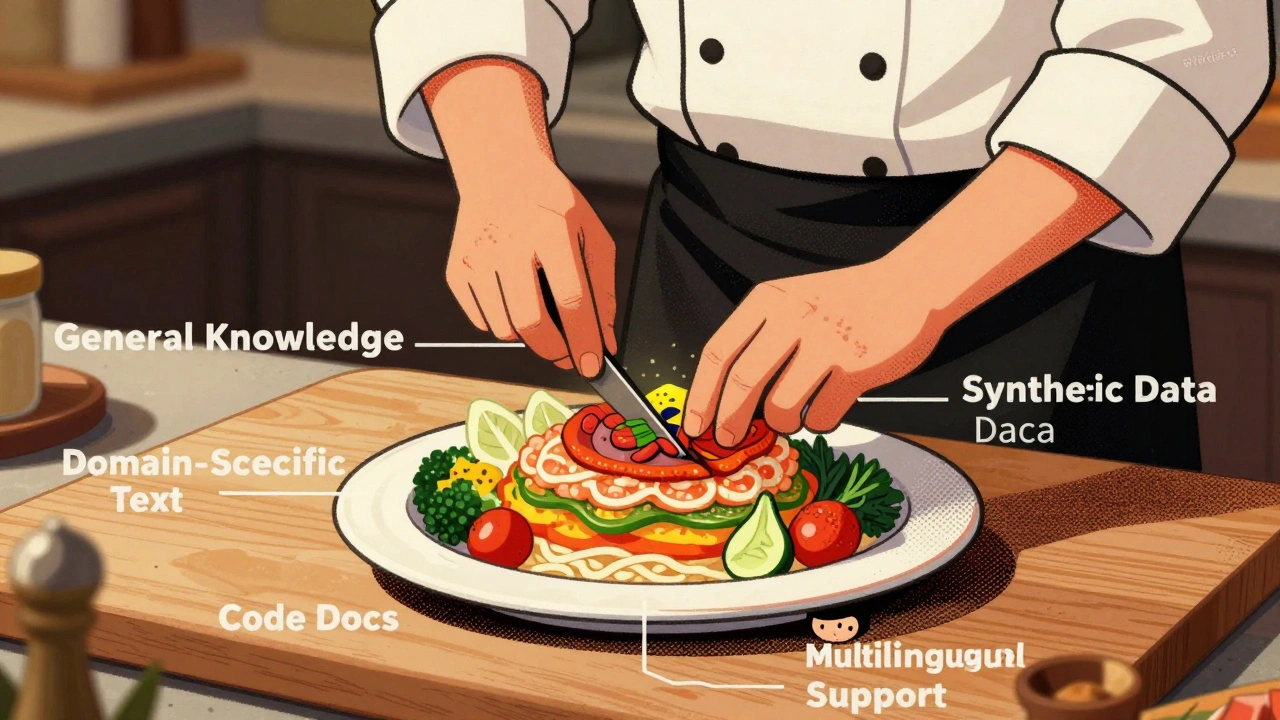

How to Build a Domain-Aware LLM: The Right Pretraining Corpus Composition

Learn how to build domain-aware LLMs by strategically composing pretraining corpora with the right mix of data types, ratios, and preprocessing techniques to boost accuracy while reducing costs.

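The post's own recipe isn't reproduced on this page. As a rough illustration of what composing a corpus "by ratio" can mean, here is a minimal Python sketch under stated assumptions: the source names, the 50/30/20 weights, and the helpers `dedupe` and `sample_mixture` are all hypothetical, and exact-match deduplication stands in for whatever preprocessing a real pipeline uses.

```python
import random
from collections import Counter

# Hypothetical target mixture: share of training documents drawn from each source.
MIXTURE = {"domain_docs": 0.5, "general_web": 0.3, "code": 0.2}


def dedupe(docs):
    # Exact-duplicate removal after case and whitespace normalization.
    seen, kept = set(), []
    for doc in docs:
        key = " ".join(doc.lower().split())
        if key not in seen:
            seen.add(key)
            kept.append(doc)
    return kept


def sample_mixture(corpora, mixture, n, seed=0):
    """Draw n (source, document) pairs, picking each source with probability equal to its weight."""
    if abs(sum(mixture.values()) - 1.0) > 1e-9:
        raise ValueError("mixture weights must sum to 1")
    rng = random.Random(seed)
    sources = list(mixture)
    pools = {src: dedupe(corpora[src]) for src in sources}
    picks = rng.choices(sources, weights=[mixture[src] for src in sources], k=n)
    # Sampling documents with replacement lets a small domain corpus be upsampled to its target share.
    return [(src, rng.choice(pools[src])) for src in picks]


# Hypothetical toy corpora; the first two domain docs are near-duplicates.
corpora = {
    "domain_docs": ["Refund policy: 30 days.", "refund policy:  30 days.", "Warranty terms for model X200."],
    "general_web": ["A news article.", "A recipe blog post."],
    "code": ["def add(a, b): return a + b"],
}
batch = sample_mixture(corpora, MIXTURE, n=10_000)
print(Counter(src for src, _ in batch))  # counts track the 50/30/20 weights, up to sampling noise
```

Sampling sources by weight, rather than concatenating every corpus, is what lets a small domain corpus punch above its raw size; the trade-off is that heavy upsampling repeats the same domain documents many times.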