Container Security: Protect Your PHP AI Apps from Runtime Threats

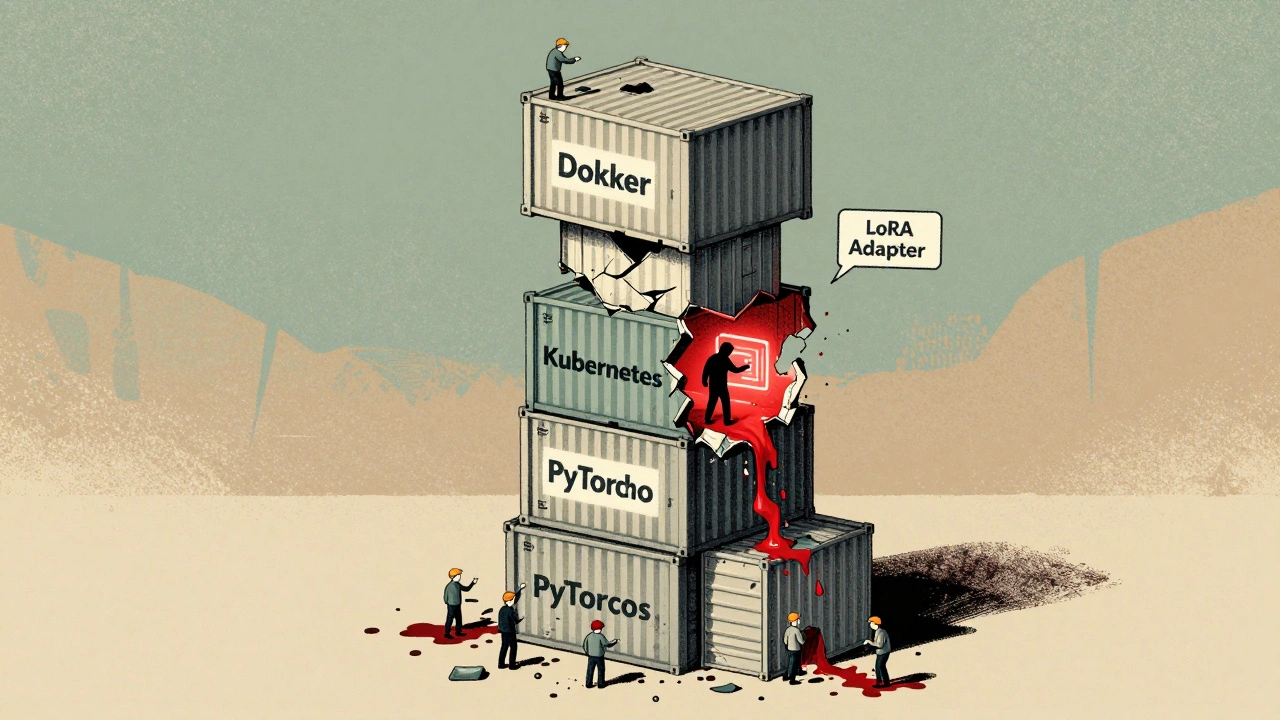

When you run AI-powered PHP apps in containers, you’re not just packaging code; you’re deploying a live system that can be attacked, exploited, or made to leak data. Container security is the practice of hardening Docker, Kubernetes, and other runtime environments to prevent unauthorized access, data theft, and code tampering. It overlaps with what is often called runtime security, and it’s the invisible shield between your AI models and the outside world. Without it, even the most advanced LLM integration can be undone by a single misconfigured port, an outdated base image, or a privileged container running as root.

Most developers focus on writing better prompts or optimizing token costs, but skip the basics: Who can access your container? What user does it run as? Is it pulling images from untrusted registries? Docker security is the set of practices that lock down container images, reduce attack surface, and enforce least-privilege access. Tools like Trivy and Clair scan your images for known vulnerabilities before a container ever starts. Kubernetes security covers the layer that manages how containers communicate, scale, and isolate workloads in production. If you’re running multiple AI services in a cluster, network policies and Pod Security Standards aren’t optional: they’re your last line of defense against lateral movement.
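As a rough illustration of the "untrusted registries" question above, here is a minimal Python sketch that flags image references whose registry is not on an allow-list. The allow-list contents and the `registry.example.com` host are hypothetical, and the parsing only follows Docker's usual convention (the first path component counts as a registry if it contains a dot or a colon, or is `localhost`; otherwise Docker Hub is assumed). Treat it as a starting point, not a full reference parser.

```python
# Hypothetical allow-list; replace with the registries your team actually trusts.
TRUSTED_REGISTRIES = {"docker.io", "registry.example.com"}


def registry_of(image_ref: str) -> str:
    """Return the registry host implied by an image reference."""
    first, sep, _rest = image_ref.partition("/")
    # Docker treats the first component as a registry host only when it
    # looks like one: it contains "." or ":" or is exactly "localhost".
    if sep and ("." in first or ":" in first or first == "localhost"):
        return first
    # Otherwise the reference resolves against Docker Hub.
    return "docker.io"


def untrusted_images(image_refs):
    """Return the references that pull from a registry outside the allow-list."""
    return [ref for ref in image_refs if registry_of(ref) not in TRUSTED_REGISTRIES]


if __name__ == "__main__":
    refs = ["php:8.3-fpm", "registry.example.com/ai/php-app:1.4", "ghcr.io/someone/llm-proxy:latest"]
    for ref in untrusted_images(refs):
        print(f"review before use: {ref}")
```

A check like this could run in CI against the image names found in your Dockerfiles or manifests; it complements vulnerability scanners rather than replacing them.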

AI models in containers often handle sensitive user data: chat logs, personal inputs, proprietary training snippets. A breach here doesn’t just break your app; it breaks trust. That’s why LLM deployment, the process of putting large language models into production with safeguards for data privacy and model integrity, must include container-level controls: encrypted volumes, read-only filesystems, and runtime monitoring for abnormal behavior such as outbound connections to unknown IPs. You don’t need fancy AI tools to fix this. You need basic hygiene: don’t run as root, don’t expose ports unless necessary, and always update your base images.
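The hygiene rules above can be checked mechanically. The sketch below is one possible approach, assuming you have the JSON for a single container as produced by `docker inspect` (it reads the `Config.User`, `HostConfig.Privileged`, `HostConfig.ReadonlyRootfs`, and `HostConfig.PortBindings` fields). The function name `audit_container` and the wording of the findings are illustrative only.

```python
def audit_container(inspect: dict) -> list:
    """Return hygiene findings for one container's `docker inspect` entry."""
    findings = []
    config = inspect.get("Config") or {}
    host = inspect.get("HostConfig") or {}

    # Config.User may be "uid", "uid:gid", or a name; empty means root.
    user = (config.get("User") or "").strip()
    uid = user.split(":", 1)[0]
    if uid in ("", "0", "root"):
        findings.append("runs as root")

    if host.get("Privileged"):
        findings.append("privileged mode enabled")

    if not host.get("ReadonlyRootfs"):
        findings.append("root filesystem is writable")

    # Every published port is listed so someone can confirm it is needed.
    for port in sorted(host.get("PortBindings") or {}):
        findings.append(f"publishes port {port}")

    return findings


if __name__ == "__main__":
    sample = {
        "Config": {"User": ""},
        "HostConfig": {
            "Privileged": False,
            "ReadonlyRootfs": False,
            "PortBindings": {"9000/tcp": [{"HostIp": "", "HostPort": "9000"}]},
        },
    }
    for finding in audit_container(sample):
        print(finding)
```

In practice you might feed it each element of `json.loads(...)` applied to the output of `docker inspect <container>`, and fail a deployment check when the list is non-empty. A published port is reported as something to review, not necessarily a defect.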

What you’ll find below are real, battle-tested guides from developers who’ve seen containers get hacked, AI data leaked, and apps taken down by misconfigurations. These aren’t theory pieces—they’re checklists, code snippets, and hard-won lessons on how to lock down your PHP AI apps before someone else does.

Supply Chain Security for LLM Deployments: Securing Containers, Weights, and Dependencies

LLM supply chain security protects containers, model weights, and dependencies from compromise. Learn how to secure your AI deployments with SBOMs, signed models, and automated scanning to prevent breaches before they happen.
