AI Financial Metrics: Track, Optimize, and Control AI Costs in Production

AI financial metrics are quantifiable measures used to track the economic impact of deploying AI systems in real-world applications. Also known as AI cost KPIs, these metrics turn vague spending into clear business decisions. Most teams don’t realize their AI budget starts leaking before they even see the first invoice. It’s not just about how many users you have; it’s about how many tokens they use, which model version they hit, and when they use it. The same question asked at 3 AM versus 10 AM could cost you 4x more because of autoscaling rules you never configured.

LLM billing, the pricing model where you pay per input and output token processed by a large language model, is the engine behind every AI financial metric. It’s not like cloud hosting, where you pay for server time. Here, you pay for every word the AI reads and writes. That means a customer asking for a 500-word report isn’t just using more compute; they’re burning through more tokens, and your bill grows in proportion. Generative AI costs, the total expenses tied to running, scaling, and maintaining generative AI systems in production, don’t come only from API calls. They also come from failed prompts that trigger retries, unused models sitting idle, and poor caching that forces the same answer to be generated ten times. And if you’re using multiple providers without a proper abstraction layer, you’re flying blind.
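To make the per-token arithmetic concrete, here is a minimal Python sketch of how a single request's cost could be estimated. The `TokenPrice` type, the `request_cost` and `cost_with_retries` helpers, and the prices in `EXAMPLE_PRICE` are all hypothetical and chosen for illustration; they are not any provider's real rates or API. The retry helper also assumes, as a simplification, that every attempt is billed in full.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TokenPrice:
    """Hypothetical prices in USD per one million tokens (not real rates)."""
    input_per_million: float
    output_per_million: float


# Illustrative numbers only; substitute your provider's published prices.
EXAMPLE_PRICE = TokenPrice(input_per_million=2.0, output_per_million=8.0)


def request_cost(input_tokens: int, output_tokens: int, price: TokenPrice) -> float:
    """Cost of one request: input and output tokens are priced separately."""
    total = (
        input_tokens * price.input_per_million
        + output_tokens * price.output_per_million
    )
    return total / 1_000_000


def cost_with_retries(
    input_tokens: int, output_tokens: int, price: TokenPrice, attempts: int
) -> float:
    """Cost when a prompt is sent `attempts` times in total.

    Assumption: each attempt is billed in full, so retries multiply the cost.
    """
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    return attempts * request_cost(input_tokens, output_tokens, price)
```

Under these made-up prices, a request with 1,000 input tokens and 500 output tokens costs about $0.006, and the same request retried twice (three attempts in total) costs about three times that. This is why retries and missing caches show up on the bill even when user counts stay flat.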

What’s the real problem? Most teams track usage but never connect it to outcomes. Did that 10,000-token spike last week come from a new feature? A bug? A bot scraping your API? Token pricing is the cost structure in which each token (a word fragment) in an AI prompt or response is charged individually. Unless you tie that pricing to user behavior, you’re guessing. The best teams now monitor per-user token consumption, peak demand cycles, and model-switching costs the way they monitor server uptime. They know when to switch from GPT-4 to a smaller model, when to throttle non-critical requests, and when to schedule batch processing to save as much as 60% on cloud bills.
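One way to start connecting usage to outcomes is to tag every request with the user and feature that triggered it, then aggregate tokens along either dimension. The sketch below assumes you already log such events; the `UsageEvent` fields and the `tokens_by` and `top_consumers` helpers are hypothetical names for illustration, not part of any billing tool.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, NamedTuple, Tuple


class UsageEvent(NamedTuple):
    """One logged request (hypothetical schema)."""
    user_id: str
    feature: str
    input_tokens: int
    output_tokens: int


def tokens_by(events: Iterable[UsageEvent], key: str) -> Dict[str, int]:
    """Sum input + output tokens grouped by an event field such as 'user_id'."""
    totals: Dict[str, int] = defaultdict(int)
    for event in events:
        totals[getattr(event, key)] += event.input_tokens + event.output_tokens
    return dict(totals)


def top_consumers(totals: Dict[str, int], n: int = 3) -> List[Tuple[str, int]]:
    """Return the n largest (name, tokens) pairs, biggest first."""
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)[:n]
```

Calling `tokens_by(events, "feature")` after a spike shows whether a new feature is responsible, while `top_consumers(tokens_by(events, "user_id"))` surfaces a single heavy account, which may turn out to be a bug or a scraper.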

You don’t need a finance degree to manage AI costs—you need visibility. The posts below show you how real teams are measuring, cutting, and controlling AI spending. You’ll see how usage patterns drive billing, how autoscaling policies can make or break your budget, and how tools like LiteLLM help avoid vendor lock-in while keeping costs predictable. Whether you’re a founder watching your burn rate or a dev trying to prove AI is worth the spend, these guides give you the exact metrics, benchmarks, and tactics that work today.

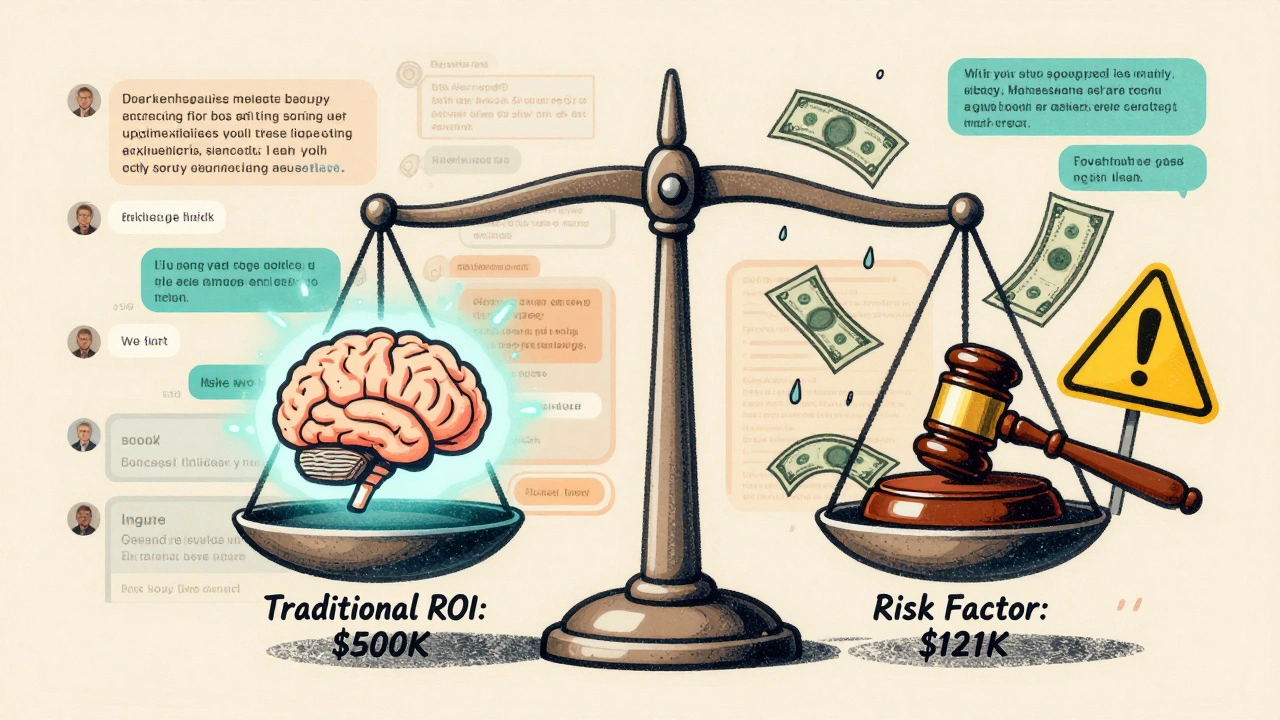

Risk-Adjusted ROI for Generative AI: How to Calculate Real Returns with Compliance and Controls

Risk-adjusted ROI for generative AI accounts for compliance costs, legal risks, and control measures to give you a realistic return forecast. Learn how to calculate it and avoid costly mistakes.
