External APIs for AI: Connect PHP Apps to OpenAI, Claude, and More

When you build AI features in PHP, you’re not training models from scratch. You’re connecting to external APIs: remote services, also known as AI endpoints, that let your PHP app use powerful AI models without running them locally. You send text, images, or data to providers like OpenAI, Anthropic, or Mistral and get responses back in real time. This is how small teams build chatbots, content filters, and automated workflows without needing a team of ML engineers.

But using external APIs isn’t just about making HTTP calls. It’s about managing costs, avoiding vendor lock-in, and handling failures gracefully. That’s why developers use tools like LiteLLM, a lightweight proxy that normalizes requests across AI providers such as OpenAI, Anthropic, and Cohere, or LangChain, a framework for chaining AI calls, memory, and data retrieval into smarter workflows. These aren’t just libraries. They’re insurance policies against sudden API price hikes or outages.
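To make "handling failures gracefully" concrete, here is a minimal PHP sketch of provider fallback with a small retry budget. It is an illustration under stated assumptions, not how LiteLLM or LangChain work internally: the function name `completeWithFallback`, the provider names, and the closures standing in for real HTTP clients are all hypothetical.

```php
<?php
declare(strict_types=1);

/**
 * Try each provider in order and return the first successful reply.
 * Hypothetical helper: $providers maps a provider name to a callable
 * that takes a prompt and returns text, or throws RuntimeException.
 */
function completeWithFallback(array $providers, string $prompt, int $maxAttempts = 2): array
{
    $errors = [];
    foreach ($providers as $name => $call) {
        for ($attempt = 1; $attempt <= $maxAttempts; $attempt++) {
            try {
                $text = $call($prompt);
                return ['provider' => $name, 'text' => $text, 'errors' => $errors];
            } catch (RuntimeException $e) {
                // Record the failure, then retry or move on to the next provider.
                $errors[] = "$name attempt $attempt: " . $e->getMessage();
            }
        }
    }
    throw new RuntimeException('All providers failed: ' . implode('; ', $errors));
}

// Stand-ins for real API clients (assumptions for illustration only).
$providers = [
    'primary' => function (string $prompt): string {
        throw new RuntimeException('503 Service Unavailable');
    },
    'secondary' => function (string $prompt): string {
        return 'echo: ' . $prompt;
    },
];

$result = completeWithFallback($providers, 'Hello');
echo $result['provider'], ' -> ', $result['text'], PHP_EOL;
```

In a real app each callable would wrap an HTTP request to one vendor, and you would likely add a delay between retries. Keeping vendors behind a common callable shape is also what makes switching providers cheap.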

Most of the posts here focus on what happens after you connect: how usage patterns spike your bill, how to autoscale when traffic surges, how to keep data private during inference, and how to test whether the AI is telling the truth. You’ll find guides on reducing cloud costs by 60% with spot instances, structuring prompts so the model is less likely to hallucinate, and monitoring token usage in real time. There’s even advice on legal compliance, such as export controls and state-level AI laws, that can catch you off guard if you’re shipping globally.

What ties all these posts together? Real-world PHP apps that rely on external APIs to do the heavy lifting. Whether you’re building a SaaS tool, a content moderation system, or a domain-specific assistant, your success depends less on your PHP code and more on how well you manage the connection to the AI behind it. Below, you’ll find practical, tested approaches—not theory, not hype—just what works when your app is live and users are counting on it.

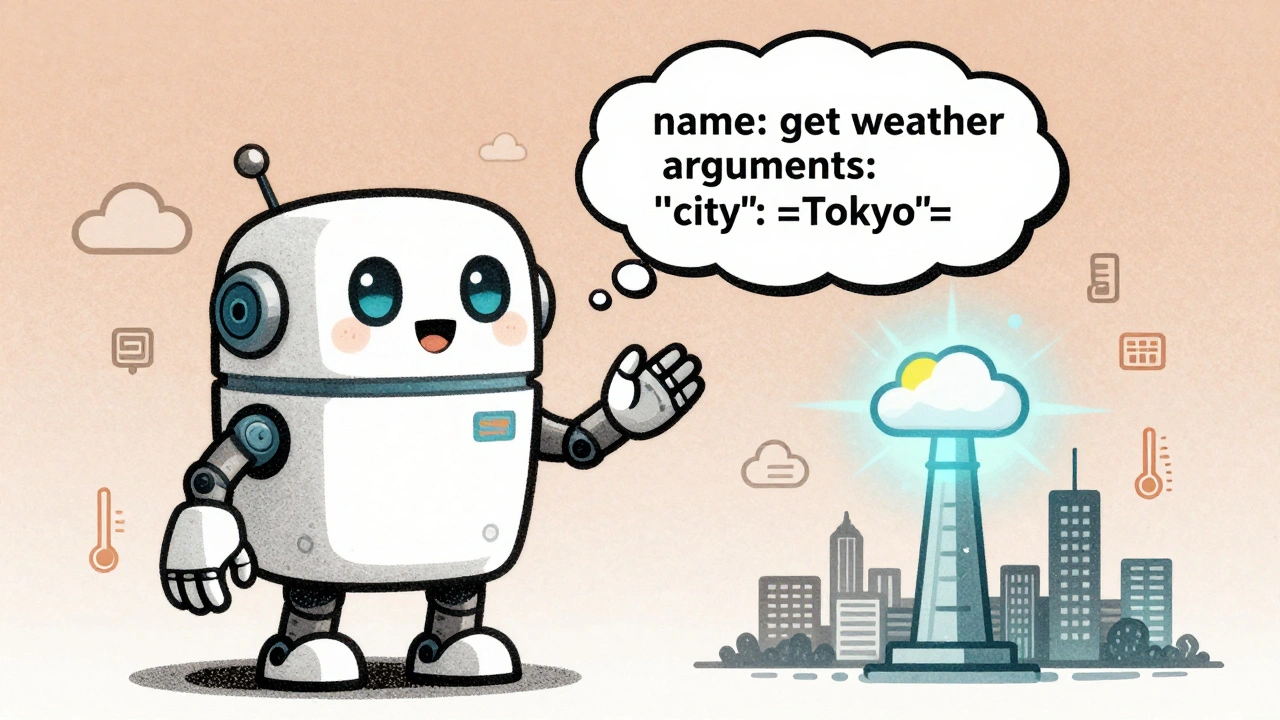

Tool Use with Large Language Models: Function Calling and External APIs Explained

Function calling lets large language models interact with real tools and APIs to access live data, reducing hallucinations and improving accuracy. Learn how it works, how major models compare, and how to build it safely.
