Compliance for Global Teams: Legal AI Rules, Data Privacy, and Cross-Border Rules

When you build AI systems for teams spread across the world, compliance for global teams (the set of legal, ethical, and operational rules that keep AI systems within local laws across borders, also known as international AI governance) is not optional. It’s the difference between launching safely and facing million-dollar fines. The EU’s AI Act, California’s privacy laws, and Brazil’s LGPD don’t just apply to your users. They apply to your code, your training data, and how you handle user inputs in real time.

That’s why enterprise data governance (the systems and policies that track, control, and audit how AI uses data inside an organization, also known as AI data management) becomes your backbone. Without it, you can’t prove you’re not using private employee emails to train your chatbot, or that your customer support AI isn’t accidentally storing PII in logs. Tools like Microsoft Purview and Databricks help, but they’re only as good as the rules you set. And those rules change by country. In Germany, as elsewhere under the GDPR, you need a lawful basis, such as explicit consent, before processing personal data. In Japan, you may be expected to disclose when an AI generated a response. In the U.S., states like Colorado and Illinois are passing laws that restrict deepfakes in political ads, and you could be held responsible for what your AI produces.
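As a rough illustration of keeping PII out of logs, here is a minimal Python sketch that redacts email addresses and phone-like numbers before a log record is written. The names `redact_pii` and `PiiRedactionFilter` and both regular expressions are assumptions made for this example, not part of any tool mentioned above. Simple patterns like these will miss many kinds of PII, so treat this as a starting point rather than a compliance control.

```python
import logging
import re

# Hypothetical, deliberately simple patterns; real PII detection needs far more coverage.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact_pii(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL_RE.sub("[REDACTED_EMAIL]", text)
    return PHONE_RE.sub("[REDACTED_PHONE]", text)


class PiiRedactionFilter(logging.Filter):
    """Logging filter that redacts the fully formatted message of each record."""

    def filter(self, record: logging.LogRecord) -> bool:
        # Format the message first so PII passed via args is also caught.
        record.msg = redact_pii(record.getMessage())
        record.args = None
        return True  # keep the record, now redacted


if __name__ == "__main__":
    logger = logging.getLogger("support_bot")
    handler = logging.StreamHandler()
    handler.addFilter(PiiRedactionFilter())
    logger.addHandler(handler)
    logger.setLevel(logging.INFO)
    logger.info("Ticket opened by %s", "jane.doe@example.com")
```

Attaching the filter to the handler rather than to individual call sites means redaction does not depend on every developer remembering to call it, which is usually easier to audit.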

And it’s not just about data. Generative AI laws (local regulations that require transparency, labeling, and safety controls for AI-generated content, also known as AI disclosure rules) force you to ask: Who’s liable when your AI gives wrong medical advice? Can your team in India use the same model your team in France uses, if the training data came from U.S. sources? These aren’t theoretical questions. Companies are already getting fined for ignoring them. Risk-adjusted ROI isn’t just about profit. It’s about avoiding lawsuits, reputational damage, and shutdowns.

What you’ll find below isn’t theory. These posts show real strategies used by teams shipping AI products across borders. You’ll see how to measure policy adherence with KPIs, how to use confidential computing to protect data during inference, how to avoid vendor lock-in when your legal team demands specific cloud providers, and how to audit your supply chain so you know every model weight and container was scanned for vulnerabilities. There’s no one-size-fits-all fix. But there are proven ways to build systems that work legally, securely, and at scale—no matter where your team sits.

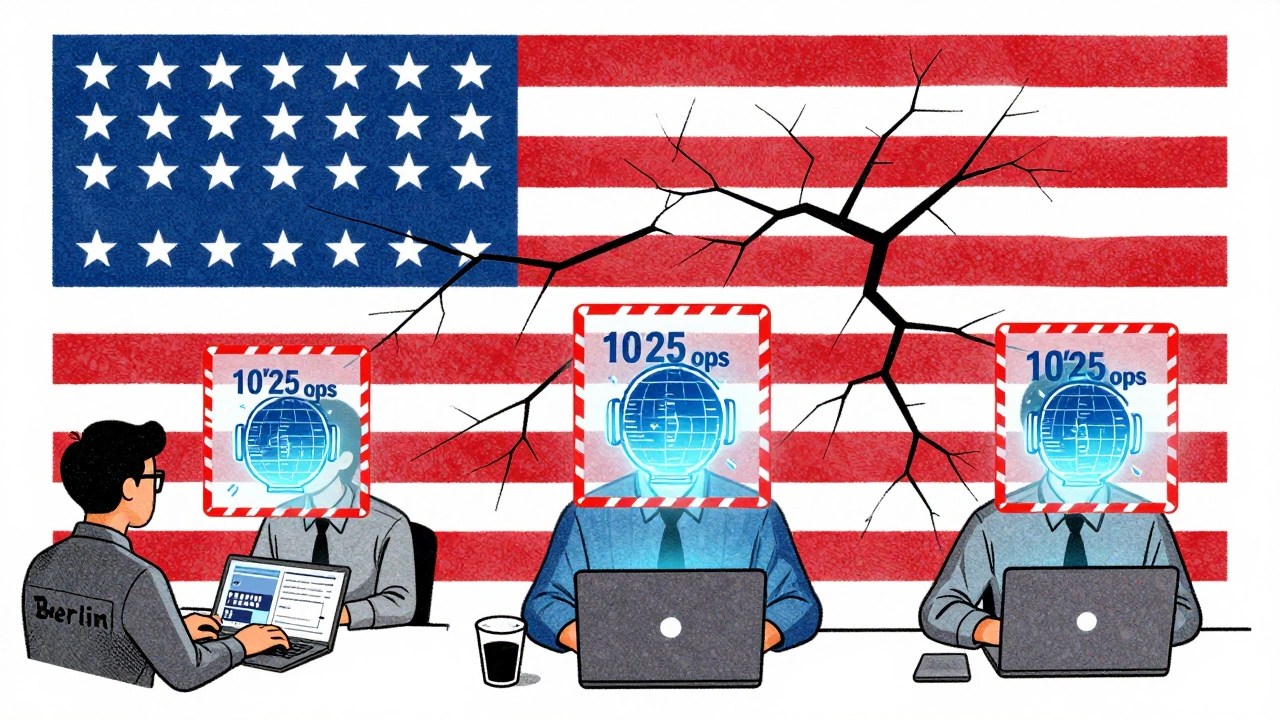

Export Controls and AI Models: How Global Teams Stay Compliant in 2025

In 2025, exporting AI models is tightly regulated. Global teams must understand thresholds, deemed exports, and compliance tools to avoid fines and keep operations running smoothly.
