AI Model Compliance: Ensure Legal, Ethical, and Secure AI Deployments

When you deploy a large language model, you're not just running code—you're handling AI model compliance, the set of rules and practices that ensure AI systems are legal, transparent, and safe to use. Also known as AI governance, it’s what separates a prototype that works from a product you can actually ship. Without it, even the most accurate AI can get you sued, fined, or publicly shamed.

AI model compliance isn’t one thing. It combines three areas: data privacy (how you collect, store, and use training data without violating laws like GDPR or CCPA), LLM governance (the internal policies and monitoring systems that track model behavior, bias, and output safety), and responsible AI (the ethical commitment to avoid harm, misinformation, and discrimination). These aren’t optional checklists. California’s AI law, for example, forces companies to disclose when AI generates content. Illinois bans deepfakes in political ads. The EU’s AI Act regulates high-risk systems, such as medical or hiring tools, much as medical devices are regulated, with audits and penalties.

You can’t just plug in OpenAI and hope for the best. If your app uses AI to answer customer questions, you need to know where the training data came from. If it’s making decisions about loans or job applications, you need to prove it’s not biased. If it’s generating text for public use, you need filters that catch harmful output before it goes live. That’s why companies are using tools like Microsoft Purview to track data lineage, deploying safety classifiers to block toxic responses, and using confidential computing to protect user data during inference. It’s not about being perfect—it’s about being accountable.
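To make the "filter before it goes live" idea concrete, here is a minimal sketch of an output gate in Python. Everything in it is an assumption for illustration: `OutputGate`, `GateDecision`, and `keyword_score` are hypothetical names, and the keyword check is only a toy stand-in for whatever safety classifier or moderation service you actually use.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class GateDecision:
    allowed: bool
    score: float
    reason: str


@dataclass
class OutputGate:
    """Hypothetical pre-publication gate: score the text, block or allow, record the decision."""
    score_fn: Callable[[str], float]  # assumed to return a harm score in [0, 1]
    threshold: float = 0.5            # illustrative default, not a recommended value
    audit_log: List[Dict] = field(default_factory=list)

    def check(self, text: str) -> GateDecision:
        score = self.score_fn(text)
        allowed = score < self.threshold
        reason = "below threshold" if allowed else "blocked: score at or above threshold"
        # Log metadata only (not the text itself) so the audit trail does not store user data.
        self.audit_log.append({"chars": len(text), "score": score, "allowed": allowed})
        return GateDecision(allowed, score, reason)


def keyword_score(text: str) -> float:
    """Toy scorer: 1.0 if any flagged word appears, else 0.0. Swap in a real classifier."""
    flagged = {"password", "ssn"}
    words = (w.strip(".,!?:;") for w in text.lower().split())
    return 1.0 if any(w in flagged for w in words) else 0.0


if __name__ == "__main__":
    gate = OutputGate(score_fn=keyword_score)
    for reply in ["Your order ships Monday.", "Here is the admin password: hunter2"]:
        decision = gate.check(reply)
        print(decision.allowed, decision.reason)
```

The part that matters for accountability is the audit log: each decision leaves a record you can review later, whichever classifier sits behind `score_fn`.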

And it’s not just legal teams worrying about this. Developers, product managers, and founders all need to understand the stakes. A single leaked dataset or unchecked hallucination can cost millions in lawsuits, lost customers, or brand damage. The posts below show you exactly how real teams are handling this: how they measure policy adherence, audit training data, cut costs without cutting corners, and build systems that stay compliant even as laws change. You’ll see what works, what doesn’t, and how to avoid the mistakes that sink most AI projects before they even launch.

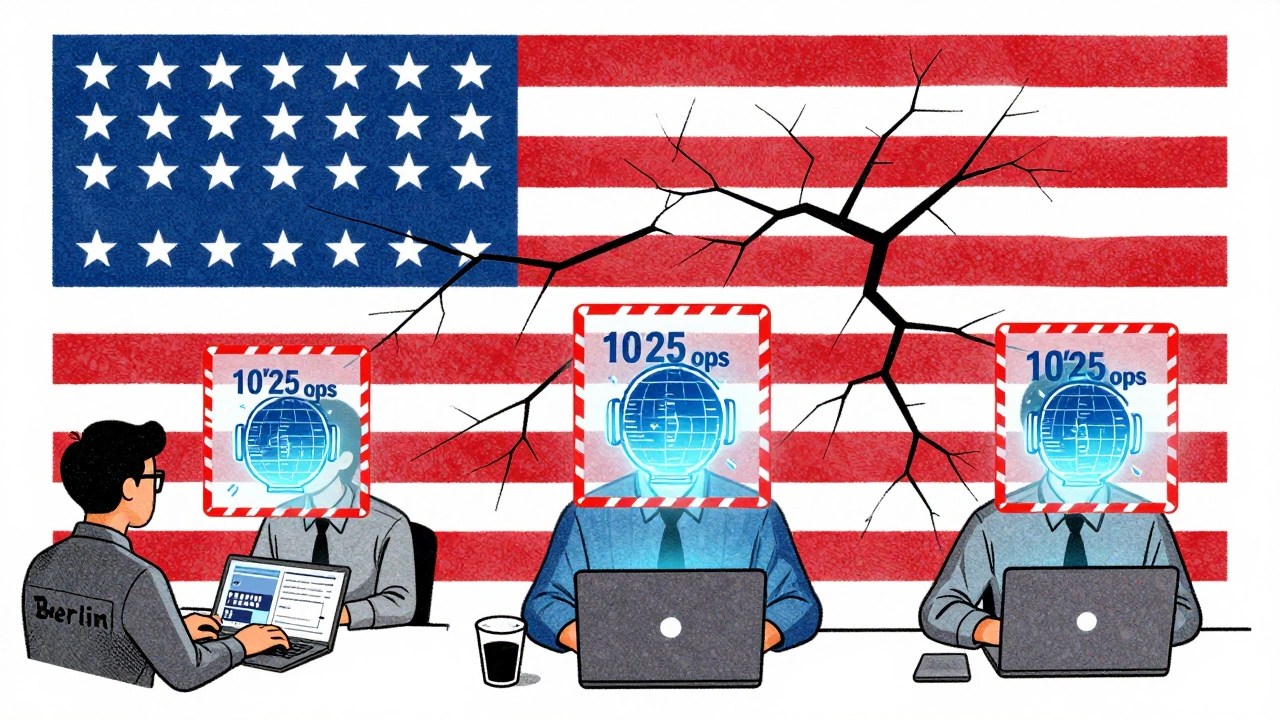

Export Controls and AI Models: How Global Teams Stay Compliant in 2025

In 2025, exporting AI models is tightly regulated. Global teams must understand thresholds, deemed exports, and compliance tools to avoid fines and keep operations running smoothly.
